Ethics and Governance of AI at Berkman Klein: Report on Impact, 2017-2019

Over the last two and a half years, the Ethics and Governance of AI Initiative at the Berkman Klein Center has pursued an interdisciplinary approach to exploring and addressing novel challenges posed by artificial intelligence. We are excited to highlight five key areas of ongoing impact from the many projects that are part of this initiative, which have developed in dialogue with contributions to this field from across our community and programs. Under the leadership of Jonathan Zittrain and Urs Gasser, the Berkman Klein Center has contributed to the debate about online disinformation, influenced the use of algorithms in the criminal justice system, supported the development of inclusive AI governance frameworks around the world, engaged the private sector in enhancing societally beneficial uses of AI, and developed new approaches to educating students and practitioners about the social impacts of AI. Below we describe some of these activities and their impacts.

1. Contributed deeply to the public dialogue about information quality and online propaganda.

Through a mix of groundbreaking research and convenings, the Media and Information Quality track of our AI Initiative programming has brought together diverse stakeholders to map the effects of automation and machine learning on content production, dissemination, and consumption patterns, while evaluating the impact of these technologies on societal attitudes, human behavior, and democratic institutions.

In collaboration with Ethan Zuckerman’s team at the Center for Civic Media, HLS Professor Yochai Benkler and a team at the Berkman Klein Center – including Research Director Rob Faris and BKC Fellow Hal Roberts – have mapped the online media ecosystem during key moments, such as the 2016 US presidential election. Leveraging Media Cloud, an open source platform for analyzing media ecosystems, the team conducted pioneering work on the relationship between online misinformation, elections, and healthy democracies, culminating in their 2018 book Network Propaganda. Their findings, which center on the structural differences between right-wing and left-wing media ecosystems, have – to quote New Yorker staff writer Jeffrey Toobin – “profound implications not only for the study of the recent past but also for predictions about the not-so-distant future.” Research collaborators in countries such as France, Germany, Spain, and Colombia are currently replicating the methodology outlined in Network Propaganda to better understand the online media ecosystem’s impacts during their own elections.

The Berkman Klein Center team is developing new methods for measuring and analyzing the structural characteristics of digital media ecosystems, such as ideology and influence. It also helped conceive and implement the AI and the News Open Challenge, which allocated $750,000 to seven projects working to shape the impact that artificial intelligence is having on news and information. We have also focused on driving content governance policy across the private and public sectors. On the public side, we’ve done so through our foundational work on the “information fiduciaries” proposal, an ongoing effort to explore legal structures under which data-driven internet companies would owe a duty of loyalty to their users. On the private side, we’ve worked with technology platforms to better understand how they are addressing information quality, harassment, and freedom of expression, particularly as they develop and deploy machine learning. This past fall we hosted a lunch talk and community workshop with Monika Bickert, Facebook’s Head of Global Policy Management, and in January 2018 we organized a “spelunking” expedition to Facebook to get an inside look at the company’s content moderation practices.

2. Influenced policymaking and practices around the use of algorithms in the criminal justice system.

The Algorithms and Justice track of the AI Initiative has explored how government institutions incorporate algorithms and machine learning technologies into their decision-making, with a focus on the criminal justice system. Our work examines how the development and deployment of these technologies by both public and private actors affect the rights of individuals and efforts to achieve social justice.

Researchers at the Berkman Klein Center’s Cyberlaw Clinic have provided analysis and advocacy around the use of risk assessment algorithms: statistical tools used across the US criminal justice system to inform decisions on the basis of an individual’s estimated risk of recidivism or some other negative outcome. While such tools ostensibly promise greater rigor and consistency in criminal justice decision-making, their use can easily yield discriminatory outcomes. We have communicated this to the Massachusetts Legislature and argued it, alongside partners at the MIT Media Lab’s Humanizing AI in Law project, in an open letter and a widely cited paper. The prevalence, use, variety, and efficacy of such tools are currently poorly understood. To address that gap, the Cyberlaw Clinic is developing the Risk Assessment Tools Database, which gathers data on where and how particular risk assessment tools are used, along with analysis of the characteristics and quality of the tools themselves. Developed in close collaboration with specialized technical researchers, policymakers, and social scientists, the database will be a resource for multiple sets of decision makers, from activists and lawyers to legislators and those tasked with procuring criminal justice algorithms.

3. Supported the development of inclusive AI governance frameworks, both within the United States and on a global scale.

The Global Governance track of the AI Initiative has explored the ethical, legal, and regulatory challenges associated with the development, deployment, and use of AI around the world. The track emphasizes direct engagement with policymakers and AI creators to develop resources and frameworks that support the development and deployment of AI for the public good.

Domestically we’ve launched the AGTech Forum to bring state attorneys general and their staffs up to speed on issues related to privacy, cybersecurity, and – under the AI Initiative – AI and algorithms. State AGs play an increasingly important role in establishing rules of the road for technology businesses and are well-positioned to act as first-movers on novel regulatory and enforcement decisions regarding applications of AI. Through the Forum, and with the support of the National Association of Attorneys General and the Harvard Law School Attorney General Clinic, we’ve hosted officials from 36 offices over the course of four biannual forums. Attendees have represented a diverse set of AGs, chief deputies, division chiefs, and line attorneys, alongside thoughtfully selected technologists and legal experts drawn from academia, civil society, and industry. AI-centric AGTech convenings have invited AGs to learn about the impacts of AI on a multitude of state enforcement and policy areas, including consumer protection and privacy, anti-discrimination and civil rights, antitrust and competition, labor rights, and criminal justice.

On a global scale, we’ve advised high-level policymakers and influenced national AI strategies by working with leaders and entities including Chancellor Angela Merkel, the ITU’s Global Symposium for Regulators, the Canadian government, and the United Nations’ High Level Committee on Programmes. We provided expert guidance to the OECD’s AI Governance Expert Group, which proposed high-level AI principles adopted by 42 countries. This work has allowed us to make a direct impact on regulatory regimes and to build relationships through which we can introduce our tools and research to high-leverage audiences.

Of course, no single center or institution can address these inherently global AI-related challenges on its own. To that end, we have cultivated and highlighted AI expertise across the global Network of Centers working on issues at the intersection of technology and society. We’ve helped enable new AI governance institutions in Singapore (SMU Center for AI & Data Governance), Thailand (ETDA in Bangkok), and Taiwan (Center for Global AI Governance at NTHU). Using the Network of Centers as a platform, we launched a significant project on AI and Inclusion with a symposium that brought together 170 participants from 40 countries. A critical output of this project has been a global roadmap for inclusive AI development, which reaches far beyond our own operational context to galvanize work around the world and was adopted by a large Canada-based international development agency. Through our capacity-building work across the Network of Centers, we are helping academic research centers conduct impact-oriented, contextually responsive, and relevant research and development work on responsible AI.

4. Engaged industry in developing socially beneficial paths for AI technology.

Just as we’ve worked with the public sector to develop policy frameworks and advocacy efforts around AI issues, we’ve also worked closely with private-sector firms that develop and deploy AI technologies. Importantly, we have focused both on the “supply” side of AI, the handful of tech companies driving technical breakthroughs in the space, and on the “demand” side, the companies deploying off-the-shelf AI technology across their products. These demand-side companies often go unmentioned in conversations about AI ethics, yet they are just as much where the rubber meets the road when it comes to the social impacts of AI.

BKC’s Challenges Forum is a venue for frank closed-door conversations with organizations developing and deploying AI about the ethical, legal, social, and technical challenges they face. The Challenges Forum has hosted a number of events, convening nearly a dozen organizations from across numerous industries, ranging from small startups to Fortune 500 companies. Through these meetings, select members of BKC’s interdisciplinary community have provided guidance and feedback to organizations trying to square performance and profit considerations with social and ethical aims. We are turning these problem-oriented discussions into a pipeline for real-world AI case studies, and are currently working with three organizations on case studies about their own AI challenges.

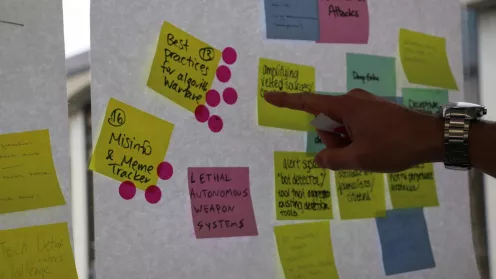

We’ve also sought to create a space for talent from industry to contribute to AI projects in the public interest. The Assembly program, hosted by the Berkman Klein Center, annually convenes a cohort of individuals drawn from industry, academia, and government, with a strong emphasis on industry, to work on technology projects aimed at advancing the social good. These projects, built over a three-month development period, range from conference workshops and investigative research to live prototypes with running code. For the last two years, Assembly has focused on AI-related problems under the auspices of the AI Initiative. Each year’s cohort is curated from a large applicant pool (more than 350 individuals applied last year) to bring together diverse but complementary skill sets. Each cohort includes a mix of engineers, designers, product managers, academic researchers, policy experts, and standout contributors from other disciplines. This year’s participants included, among others, machine learning PhDs, Google AI developers, a senior technology policy advisor to Washington Governor Jay Inslee, and a US Navy SEAL officer.

Over the last two years, Assembly has produced a number of notable outputs. For example, Assembly 2018’s Data Nutrition Project, now an independent nonprofit, has built substantial momentum around new standards and formats for assessing and labeling datasets, inspired by the FDA’s nutrition labels for food products. This year the team presented at SXSW and, supported by a recent contribution from the Ethics and Governance of AI Initiative’s third-party grant pool, is hiring additional staff to further develop its prototypes. The products that come out of Assembly are only half of the story. Assembly has also generated a rich network of socially aware AI practitioners: engineers, product managers, and designers who have brought the program’s lessons back to their companies. One Assembler from the 2019 cohort is transitioning into a full-time ethicist role at his company based on his experiences in the program.

5. Developed new approaches to teaching students and the public at large about the social implications of AI technology.

As a counterweight to the larger private- and public-sector efforts driving the development of AI, the initiative devotes part of its work to education at the university level. It does so by convening diverse perspectives and expertise, translating scholarship into actionable guidance, and creating forums for engagement among faculty, students, and practitioners.

During the 2018-2019 academic year, we piloted the Techtopia program, a student-faculty research initiative dedicated to developing pedagogy and research around tech’s biggest problems. In September, following a highly competitive application process, we admitted an inaugural cohort of 17 students representing nine of Harvard’s schools. In the months since, members of this strikingly tight-knit cohort have attended biweekly seminars taught by affiliated faculty members, worked alongside those faculty members as research assistants, and developed ambitious capstone projects. Those projects, conceived by the students themselves and advised by faculty, included a guide for municipalities on procuring algorithmic systems and a series of creative “artifacts” exploring the relationship between AI technology and human emotion. Equally impressive has been the Techtopia Faculty and Friends community, a group of faculty and staff from across the university who have come together to design and support the program. This community has fostered ambitious cross-disciplinary partnerships, both logistical and substantive, of a kind rarely seen otherwise. For the 2019-2020 academic year, Techtopia will become the Assembly Student Fellowship, connected to the broader Assembly program and the external practitioners and experts who take part in it.

As we’ve built new pedagogical models aimed at making social impact a key consideration in technical education, we’ve also researched how AI, as it exists today, is shaping the lives of younger students. In May 2019, the Berkman Klein Center’s Youth and Media team released a new report entitled Youth and Artificial Intelligence: Where We Stand. The widely read report, which informs UNICEF’s AI Policy Guidance on Children and their rights, examines some of the ways in which AI technology is reconfiguring the experiences of learners starting in early childhood, and flags a number of key questions for teachers and policymakers. Key challenges highlighted by the Youth and Media team include privacy and data handling concerns, the potential amplification of patterns of social bias, and a growing global knowledge gap around autonomous systems.

Our educational work has sought to reach beyond the classroom through media such as data visualization and public art. The Principled Artificial Intelligence Project, launched at the Cyberlaw Clinic in June 2019, is working to map the thicket of guidelines, standards, and best practices around AI development and deployment. The AI Initiative has also fed into the Harvard metaLAB's AI + Art portfolio of installations, talks, publications, and teaching. Led by metaLAB Senior Researcher Newman, the AI + Art initiative has produced 10 projects, staged more than 45 exhibitions in 11 countries, been covered in more than 25 articles, taught nine workshops and courses, and given over 50 public talks.

For more information about Berkman Klein’s work on the Ethics and Governance of AI, visit https://cyber.harvard.edu/topics/ethics-and-governance-ai