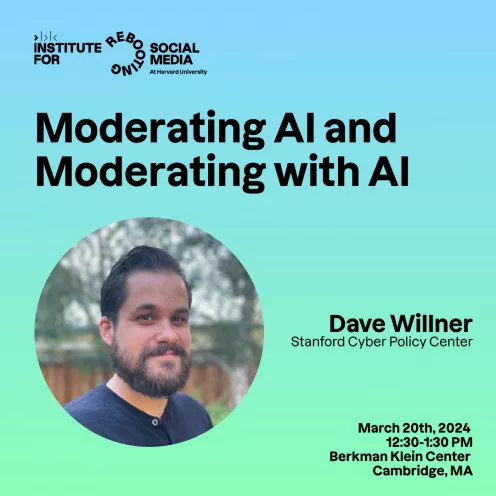

Moderating AI and Moderating with AI

RSM Speaker Series

RSM welcomes Dave Willner for a talk on the promises and perils that foundation models present for the field of content moderation.

The rise of increasingly powerful foundation models – particularly large language models (LLMs) and large multimodal models (LMMs) – will fundamentally transform the practice of content moderation. This is true both because of the novel risks and features of the models themselves, as has been much discussed, and because of the less explored ways in which these models can and will be used to solve previously intractable problems in moderation. While the exact contours of the new terrain of moderation have not yet fully come into view, it is possible to anticipate the changes we will see by pairing a thorough understanding of the core challenges of scaled content moderation with the core advantages provided by foundation models.

Dave Willner started his career at Facebook helping users reset their passwords in 2008. He went on to join the company’s original team of moderators, write Facebook’s first systematic content policies, and build the team that maintains those rules to this day. After leaving Facebook in 2013, he consulted for several startups before joining Airbnb in 2015 to build the Community Policy team. While there, he also took on responsibility for Quality and Training for the Trust team. After leaving Airbnb in 2021, he began working with OpenAI, first as a consultant and then as the company’s first Head of Trust and Safety. He is currently a Non-Resident Fellow in the Program on Governance of Emerging Technologies at the Stanford Cyber Policy Center.
