Media Cloud

Overview

Media Cloud is a project that comprehensively tracks news content and provides open, free, and flexible tools for analyzing it. Together, these will allow unprecedented quantitative analysis of media trends. Some of our driving questions are:

- Do bloggers introduce storylines into mainstream media or the other way around?

- What parts of the world are being covered or ignored by different media sources?

- Where do stories begin?

- How are competing terms for the same event used in different publications?

- Can we characterize the overall mix of coverage for a given source?

- How do patterns differ between local and national news coverage?

- Can we track news cycles for specific issues?

- Do online comments shape the news?

You can see some simple visualizations generated by our system on the main Media Cloud site, but the project is under very active development and there is much more under the hood.

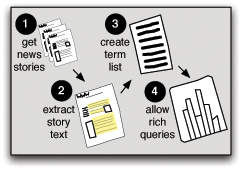

Our ideas break down roughly along the components of our system.

Ideas

Get News Stories

We currently have a Perl script that crawls news stories. It checks the relevant RSS feeds for new stories, requests each story page, and (if necessary) programmatically determines the "next page" link and requests each additional page. It works fairly well in most cases, but with an arbitrary and growing number of feeds and sites, it sometimes breaks down. The crawler could be improved in several ways, including better fault tolerance, error reporting, and paging. Paging ("next page" detection) is a particularly interesting, and more generalizable, computer science problem.
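To illustrate the "next page" problem, here is a minimal Python sketch of one common heuristic, not the logic of our Perl crawler: score every link on the page by an explicit `rel="next"` attribute, by link text such as "Next" or "»", or by a number one greater than the current page, and follow the best-scoring link. The weights, the `NEXT_TEXT` pattern, and the names `LinkCollector` and `find_next_page` are hypothetical choices for illustration.

```python
import re
from html.parser import HTMLParser
from urllib.parse import urljoin

# Link texts that often label a "next page" link (assumed, English-only, non-exhaustive).
NEXT_TEXT = re.compile(r"^(next( page)?|continue|[»›>]+)(\s*[»›>]+)?$", re.IGNORECASE)


class LinkCollector(HTMLParser):
    """Collects [href, rel, text] for every <a> and <link> element on a page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._open = None  # the <a> element currently being read, if any

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower()
        if tag == "a":
            self._open = [attrs.get("href"), rel, ""]
        elif tag == "link":  # e.g. <link rel="next" href="..."> in the page head
            self.links.append([attrs.get("href"), rel, ""])

    def handle_data(self, data):
        if self._open is not None:
            self._open[2] += data

    def handle_endtag(self, tag):
        if tag == "a" and self._open is not None:
            self.links.append(self._open)
            self._open = None


def find_next_page(html, page_url, current_page=1):
    """Return the absolute URL of the most likely "next page" link, or None."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    best_url, best_score = None, 0
    for href, rel, text in collector.links:
        if not href:
            continue
        text = " ".join(text.split())
        score = 0
        if "next" in rel.split():
            score += 3  # explicit rel="next" is the strongest signal
        if NEXT_TEXT.match(text):
            score += 2  # "Next", "Next page", "»", ...
        if text == str(current_page + 1):
            score += 1  # numbered pagination such as "1 2 3"
        if score > best_score:
            best_url, best_score = urljoin(page_url, href), score
    return best_url
```

A production crawler would also need to guard against loops (for example, by tracking URLs it has already fetched) and against "next" links that lead to a different story rather than the next page of the same one.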

Extract Story Text

We think that this part of the system is particularly interesting. The problem can be stated as: "Given an arbitrary web page believed to contain a news story, how do you identify the body text of that story while ignoring all other text?" We have a decent solution based on HTML density and the statistical frequency of a variety of other custom-defined factors. We have also built a substantial testing corpus of pages in which a human has marked the correct output. Improving the algorithm means changing it and checking whether each change raises the match rate against this corpus without increasing false positives or false negatives. We are also interested in abandoning (or supplementing) our HTML density approach in favor of a neural network approach. It would be very exciting if such an approach worked, but we have not yet tried it.

This is another place in which the solution can be generalized to computer science applications in other domains.
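To make the density idea and the evaluation loop concrete, here is a minimal Python sketch of a generic text-density heuristic, not Media Cloud's actual extractor: split the page into blocks at block-level tags, keep blocks with a lot of text relative to the inline markup (mostly links) inside them, and score the result against a human-labeled set of body blocks. The tag lists, the thresholds `min_density` and `min_chars`, and all function names are assumptions.

```python
from html.parser import HTMLParser

# Tags that start or end a text block (an assumed, simplified list).
BLOCK_TAGS = {"p", "div", "td", "li", "h1", "h2", "h3", "article", "section", "br"}
# Tags whose contents are never story text.
SKIP_TAGS = {"script", "style"}


class BlockSplitter(HTMLParser):
    """Splits a page into text blocks and counts the inline tags inside each block."""

    def __init__(self):
        super().__init__()
        self.blocks = []  # list of (normalized text, inline tag count)
        self._text = []
        self._inline_tags = 0
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def _flush(self):
        text = " ".join("".join(self._text).split())
        if text:
            self.blocks.append((text, self._inline_tags))
        self._text, self._inline_tags = [], 0

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self._skip_depth += 1
        elif tag in BLOCK_TAGS:
            self._flush()
        else:
            self._inline_tags += 1  # e.g. <a>, <span>, <b>

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS:
            self._skip_depth = max(0, self._skip_depth - 1)
        elif tag in BLOCK_TAGS:
            self._flush()
        else:
            self._inline_tags += 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self._text.append(data)

    def close(self):
        super().close()
        self._flush()  # emit any trailing text


def extract_body(html, min_density=20.0, min_chars=40):
    """Return blocks whose text length per inline tag is high enough to look like body text."""
    splitter = BlockSplitter()
    splitter.feed(html)
    splitter.close()
    return [text for text, tags in splitter.blocks
            if len(text) >= min_chars and len(text) / (1 + tags) >= min_density]


def score(extracted, expected):
    """Precision and recall of extracted blocks against human-labeled body blocks."""
    got, want = set(extracted), set(expected)
    hits = len(got & want)
    precision = hits / len(got) if got else 0.0
    recall = hits / len(want) if want else 0.0
    return precision, recall
```

Precision drops when non-body text is kept (false positives) and recall drops when body text is missed (false negatives), so a candidate change should raise one without lowering the other across the whole test corpus.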

Create Term List

We currently extract relevant terms from each story as a way to determine its semantic meaning, primarily using the OpenCalais API. We use only the straight list of terms that Calais gives us, but Calais actually returns much richer metadata about each term. There are a variety of ways we could use this data; in the simplest case, our tool would be much smarter if it knew whether a given term was a person, place, or event.
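As a sketch of why typed terms help, the Python below assumes each story's terms arrive as `(term, entity_type)` pairs. That input shape, the type labels, and `count_by_type` are hypothetical and do not reflect the actual OpenCalais response format. Counting per type lets a query ask, for example, which people (rather than which terms of any kind) appear in the most stories.

```python
from collections import Counter, defaultdict


def count_by_type(story_terms):
    """For each entity type, count how many stories mention each term.

    story_terms maps a story id to a list of (term, entity_type) pairs. This
    input shape is a hypothetical simplification, not the OpenCalais format.
    """
    counts = defaultdict(Counter)
    for pairs in story_terms.values():
        for term, entity_type in set(pairs):  # count a term at most once per story
            counts[entity_type][term] += 1
    return counts
```

With this structure, `count_by_type(stories)["Person"].most_common(10)` gives the ten people mentioned in the most stories, and a term tagged with two different types is counted separately under each.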

Likewise, we want to experiment with alternative term extraction algorithms or engines. We have one simple vocab-matching algorithm, but there are quite a few other approaches that one could take. We also have not yet experimented with the Yahoo Term Extraction API, although it looks promising.
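For comparison with other engines, a simple vocabulary-matching extractor can be sketched in a few lines of Python. This is a hypothetical illustration, not our existing algorithm: it lowercases and tokenizes the text with an assumed regular expression and scans for vocabulary phrases using greedy longest match, so "Federal Reserve" is counted as one term instead of also counting "Reserve".

```python
import re
from collections import Counter

# Assumed tokenization: lowercase runs of letters and digits.
TOKEN = re.compile(r"[a-z0-9]+")


def tokenize(text):
    return TOKEN.findall(text.lower())


def build_vocab(phrases):
    """Index vocabulary phrases by their token tuples; also return the longest phrase length."""
    vocab = {tuple(tokenize(p)): p for p in phrases if tokenize(p)}
    return vocab, max((len(key) for key in vocab), default=0)


def match_terms(text, vocab, max_len):
    """Count vocabulary phrases in the text, preferring the longest match at each position."""
    tokens = tokenize(text)
    found = Counter()
    i = 0
    while i < len(tokens):
        for n in range(min(max_len, len(tokens) - i), 0, -1):
            phrase = vocab.get(tuple(tokens[i:i + n]))
            if phrase:
                found[phrase] += 1
                i += n  # skip past the matched phrase
                break
        else:
            i += 1  # no vocabulary phrase starts here
    return found
```

Because the output is just a term-to-count mapping, the same evaluation harness could compare this extractor, Calais, and any other engine on the same stories.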

Another idea here is to develop clustering or dynamic topic identification algorithms based on more sophisticated analysis, such as Statistically Improbable Phrases.
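One simple way to approximate Statistically Improbable Phrases, sketched here in Python as an assumption rather than a settled design, is to compare how often each two-word phrase occurs in a story with how often it occurs in a background corpus of other stories. Add-one smoothing keeps phrases never seen in the corpus from getting an infinite score, and `min_count` (a hypothetical parameter) filters out phrases that occur only once in the story.

```python
import re
from collections import Counter


def bigrams(text):
    """Count adjacent word pairs in lowercased text."""
    words = re.findall(r"[a-z]+", text.lower())
    return Counter(zip(words, words[1:]))


def improbable_phrases(doc, corpus_docs, min_count=2, top=5):
    """Rank the document's bigrams by how much more frequent they are in it than in the corpus."""
    doc_counts = bigrams(doc)
    corpus_counts = Counter()
    for other in corpus_docs:
        corpus_counts.update(bigrams(other))
    doc_total = sum(doc_counts.values())
    corpus_total = sum(corpus_counts.values())
    vocab_size = len(set(corpus_counts) | set(doc_counts))
    scores = {}
    for gram, count in doc_counts.items():
        if count < min_count:
            continue
        p_doc = count / doc_total
        # Add-one smoothing so bigrams unseen in the corpus get a finite score.
        p_corpus = (corpus_counts[gram] + 1) / (corpus_total + vocab_size)
        scores[" ".join(gram)] = p_doc / p_corpus
    return sorted(scores, key=scores.get, reverse=True)[:top]
```

Common phrases such as "in the" occur often in both the story and the corpus and so score low; phrases characteristic of the story rise to the top and could serve as features for clustering or topic detection.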

Allow Rich Queries

We have terabytes of data and millions of archived stories. How can we construct queries that run efficiently on this data set and produce interesting and compelling results? For instance, we are currently experimenting with time-sequence analysis of different terms across different media sources (see our experimental charts of coverage of the bailout). How would we go about visualizing some of these questions with the data we have? We currently use the Google Visualization API to generate our charts.
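A time-sequence query of this kind might look like the sketch below, which counts, per source and per day, the stories mentioning a given term. The table and column names (`stories`, `story_terms`, `media_source`, `publish_date`) are hypothetical, not our actual PostgreSQL schema, and the example uses Python's built-in `sqlite3` only so that it runs standalone; placeholder syntax differs between database drivers.

```python
import sqlite3

# Hypothetical schema for illustration only.
SCHEMA = """
CREATE TABLE stories (id INTEGER PRIMARY KEY, media_source TEXT, publish_date TEXT);
CREATE TABLE story_terms (story_id INTEGER REFERENCES stories(id), term TEXT);
CREATE INDEX story_terms_term ON story_terms (term);
"""

# Number of distinct stories per source per day that mention a given term.
TERM_TIMELINE = """
SELECT s.media_source, s.publish_date, COUNT(DISTINCT s.id) AS story_count
FROM stories s
JOIN story_terms t ON t.story_id = s.id
WHERE t.term = ? AND s.publish_date BETWEEN ? AND ?
GROUP BY s.media_source, s.publish_date
ORDER BY s.media_source, s.publish_date
"""


def term_timeline(conn, term, start, end):
    """Return (media_source, publish_date, story_count) rows; dates are ISO 'YYYY-MM-DD' strings."""
    return conn.execute(TERM_TIMELINE, (term, start, end)).fetchall()
```

Pivoting these rows to one column per source gives a date-by-source table suitable for a line chart. On a data set of millions of stories, an index on the term column (as in the sketch) matters far more than the exact query shape.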

The other idea in this area is to develop a rich API that allows others to access our data. This is a high priority for us, but we need help. If you worked with us in this area, you would have considerable latitude in defining the queries, the query language, and so on.

Skills

The required skills vary by idea, but our system is an MVC web app based on the Catalyst Perl framework. We also have many additional scripts (mostly written in Perl) that perform various tasks. Our database is PostgreSQL, so if you are familiar with MySQL or any other SQL variant you should be fine. You need not be a Perl wizard to work on the project: we recognize that a smart geek can get up to speed on Perl fairly quickly, or can even write components in another language as long as they are modular and maintainable.