GROUP TWO

Issues of online identity and reputation bankruptcy deserve to be called difficult problems: Eric Schmidt's comment in a Wall Street Journal interview that soon “every young person…will be entitled automatically to change his or her name on reaching adulthood in order to disown youthful hijinks stored on their friends’ social media sites” [http://online.wsj.com/article/SB10001424052748704901104575423294099527212.html] stirred a heated debate [http://www.concurringopinions.com/archives/2010/09/reputation-bankruptcy.html] (even though, when interviewed for this project, he asserted it was only a "joke"). The controversy in this area gave our team ample motivation to take a closer look at the problem.

We start by providing some evidence of the importance of online identity and reputation, with a particular focus on the future impact of online reputation on coming generations. We then turn to recent scholarship and other proposed solutions, organizing the field using Lessig's framework of Law, Code, Market, and Norms [https://secure.wikimedia.org/wikipedia/en/wiki/Code_and_Other_Laws_of_Cyberspace], before presenting our proposed solution: a law, supported by code and norms, which we call the Minor's Online Identity Protection Act. We are aware of the potential implications and concerns, and we present some of them in the following section.

Our solution would ultimately benefit both children and adults. We seek to give children, while they are still minors, better protection from damaging content that either they or others post about them. Looking forward, we seek to protect adults from earlier "youthful indiscretions" or "hijinks" and other damaging records from their time as minors, analogous to juvenile record sealing and expungement.

==Importance of Online Identity and Reputation==

As the first generation to grow up entirely in the digital age reaches adulthood, what has already been identified as a difficult problem could have serious and damaging consequences. Vast amounts of personal content have already been digitally captured in perpetuity, and more is added every day. As one New York Times article aptly observed, the Internet effectively takes away second chances, so that "the worst thing you've done is often the first thing everyone knows about you." [http://www.nytimes.com/2010/07/25/magazine/25privacy-t2.html] Others have noted that "[t]he Internet erases inhibitions," which tends to make people, especially young people, more likely to act out or behave badly online. [http://www.nytimes.com/2010/12/05/us/05bully.html?hp] In addition, the viral dynamics of the Internet and of search engines, which quickly rank information based in part on popularity, may amplify reputational damage. Moreover, as facial-recognition technology continues to advance, it will pose a stark challenge to "our expectation of anonymity in public." [http://www.nytimes.com/2010/07/25/magazine/25privacy-t2.html]

Minors are particularly vulnerable to these developments because they are the least able to fully comprehend the ramifications of online behavior and are unlikely to exercise the maturity and restraint that we expect of adults (even if adults do not always exercise such restraint in practice). Congress has already recognized the importance of protecting children in this regard by passing several pieces of legislation, the most relevant of which is the [http://www.coppa.org Children's Online Privacy Protection Act] (COPPA). One failing of COPPA, however, is that its protection ends at age thirteen. While young children may be the least cognitively advanced, it is older children and adolescents who are more likely to have sensitive and damaging content posted about them on the Internet (e.g., photos of them drinking or smoking with friends, or sexually charged material). More importantly, COPPA has proven ineffective even at shielding the age group it is designed to protect: studies show that as many as 37% of children aged 10-12 have Facebook accounts, and there are approximately 4.4 million Facebook users under age thirteen in the United States, even though Facebook's policy complies with COPPA standards. [http://internet-safety.yoursphere.com/2010/11/facebook-ignores-coppa-yoursphere-law-enforcement-team-member-speaks-out.html]

The lack of effective protection for minors, coupled with the staggering number of minors who post private content on the Internet, calls for a solution. This youthful web-based content also has serious implications for these young people's lives going forward: a recent Microsoft study reports that 75% of US recruiters and human-resource professionals say their companies require them to do online research about candidates, and many use a range of sites when scrutinizing applicants. [http://www.nytimes.com/2010/07/25/magazine/25privacy-t2.html] While similar reputational problems exist for those who posted content as adults, the problem of online identity and reputation is particularly compelling in the case of minors.

==The Recent Scholarship and Other Proposed Solutions==


===Law-Based===

The primary existing law protecting children on the Internet is the [http://www.coppa.org Children's Online Privacy Protection Act] (COPPA). COPPA is directed at the operators of websites, and its scope is summarized as follows: "If you operate a commercial Web site or an online service directed to children under 13 that collects personal information from children or if you operate a general audience Web site and have actual knowledge that you are collecting personal information from children, you must comply with the Children's Online Privacy Protection Act." This law, however, is quite narrow and does little in practice to protect children on the Internet. Children under the age of thirteen can simply lie about their dates of birth when creating accounts. As a result, content hosts and intermediaries face few repercussions for failing to prevent even such blatant tactics, as long as they do not have actual knowledge. It should be noted, though, that the FTC has successfully pursued some financial penalties and settlements. [http://www.ftc.gov/opa/2003/02/hersheyfield.shtm]
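The two branches of the quoted scope test, and the loophole created by the "actual knowledge" requirement, can be expressed as a small predicate. This is a deliberately simplified, hypothetical reading of the quoted text: the `Operator` fields are our own labels, the "commercial" qualifier is ignored, and it is not legal advice or the full statutory test.

```python
from dataclasses import dataclass


@dataclass
class Operator:
    """Hypothetical flags describing a website or online service."""
    directed_to_children_under_13: bool
    collects_personal_info: bool
    knows_it_collects_from_children: bool  # "actual knowledge" in the quoted text


def coppa_applies(op: Operator) -> bool:
    """Simplified reading of the quoted scope test; not legal advice."""
    # Branch 1: a site or service directed to children under 13 that collects personal info.
    if op.directed_to_children_under_13 and op.collects_personal_info:
        return True
    # Branch 2: a general-audience site with actual knowledge that it collects
    # personal info from children.
    return op.knows_it_collects_from_children


# A general-audience social network whose under-13 users lied about their
# birth dates: without actual knowledge, the test is never triggered.
social_network = Operator(
    directed_to_children_under_13=False,
    collects_personal_info=True,
    knows_it_collects_from_children=False,
)
print(coppa_applies(social_network))  # False
```

The second branch shows why false birth dates are so effective: nothing in the test obliges a general-audience operator to look for the knowledge that would trigger it.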

Congress has passed additional laws to protect children online, with differing results. One such law, the [http://www.fcc.gov/cgb/consumerfacts/cipa.html Children's Internet Protection Act] (CIPA), requires schools and public libraries to employ certain content filters, shielding children from harmful or obscene content, as a condition of receiving certain federal funding. The Supreme Court upheld the constitutionality of this law in ''United States v. American Library Association''. [http://www.law.cornell.edu/supct/html/02-361.ZS.html] On the other hand, the [http://www.copacommission.org/ Child Online Protection Act] (COPA), which was designed to restrict children's access to any material deemed harmful to them on the Internet, did not survive its legal challenges. In upholding a lower court's preliminary injunction, the Supreme Court found that the law was unlikely to be sufficiently narrowly tailored to pass First Amendment scrutiny. [http://www.law.cornell.edu/supct/html/03-218.ZS.html]

State law offers some existing tort remedies for privacy violations, though they are not necessarily geared towards children. For example, thirty-six states already recognize a tort for "public disclosure of private fact," which essentially bars dissemination of non-newsworthy personal information that a reasonable person would find highly offensive. [http://www.citmedialaw.org/legal-guide/publication-private-facts] Some state laws are more specific, such as criminal laws forbidding the publication of the names of rape victims (for an argument against such laws, see Eugene Volokh [http://www.law.ucla.edu/volokh/privacy.htm#SpeechOnMattersOfPrivateConcern]). Anupam Chander argues in "Youthful Indiscretion in an Internet Age" that the tort for public disclosure of private fact should be strengthened (forthcoming in "The Offensive Internet," 2011 [http://www.amazon.com/Offensive-Internet-Speech-Privacy-Reputation/dp/0674050894/ref=sr_1_1?s=books&ie=UTF8&qid=1290199043&sr=1-1]).

Chander also recognizes two important legal hurdles to strengthening the public disclosure of private fact tort. The first is that the indiscretion at issue may be legitimately newsworthy, which raises serious First Amendment concerns. The second is that intermediaries are often protected from liability under the Communications Decency Act (CDA). Despite these obstacles, Chander persuasively argues that such protection is needed, particularly in the context of nude images, because society's fascination with embarrassing content will not abate. Moreover, he observes that an individual's humiliation "does not turn on whether some activity is out of the ordinary or freakish": common behavior can still cause significant personal damage.

Other legal proposals have also been put forward to curb the problems of online identity and reputation. One set of proposals targets employers. For instance, Paul Ohm has supported a law that would bar employers from firing current employees, or refusing to hire applicants, based on legal, off-duty conduct found on social networking profiles. [http://www.nytimes.com/2010/07/25/magazine/25privacy-t2.html] [http://paulohm.com/] Germany is also considering a privacy law that would ban employers from mining social networking sites such as Facebook for information on job applicants. The law could impose significant fines on employers who violate it. German government officials have noted, however, that the law could be difficult to enforce because violations would be hard to prove. [http://www.reuters.com/article/idUS338070983320100826]


Meanwhile, Dan Solove has considered a legal proposal that would give individuals a right to sue Facebook friends for certain breaches of confidence that violate their privacy settings. [http://www.nytimes.com/2010/07/25/magazine/25privacy-t2.html][http://docs.law.gwu.edu/facweb/dsolove/Future-of-Reputation/text.htm] He finds it problematic that current U.S. law provides no protection when others wrongfully spread your secrets, and he believes that the United States should adopt a regime that better protects people from such transgressions. [http://www.boston.com/bostonglobe/ideas/articles/2009/02/15/time_for_a_muzzle/] In some other countries, such as England, the law provides broader protection where friends and ex-lovers have breached a duty of confidentiality. [http://www.boston.com/bostonglobe/ideas/articles/2009/02/15/time_for_a_muzzle/]

A more drastic idea, mentioned by Peter Taylor, would be to create a constitutional right to privacy or “oblivion” to allow for more anonymity. [http://blog.petertaylor.co.nz/2010/07/22/%E2%80%9Cconstitutional-right-to-oblivion-%E2%80%9D-the/] Less radical, but still significant in its own right, is Cass Sunstein's proposal of "a general right to demand retraction after a clear demonstration that a statement is both false and damaging." [http://www.amazon.com/Rumors-Falsehoods-Spread-Believe-Them/dp/0809094738][http://www.nytimes.com/2010/07/25/magazine/25privacy-t2.html] This bears some resemblance to defamation and libel law, but whereas those laws normally only require the speaker or publisher to pay damages for reputational harm, Sunstein's approach is specifically geared towards removal of the material. In fact, his proposal is largely modeled on the existing notice-and-takedown system of the [http://www.copyright.gov/legislation/pl105-304.pdf Digital Millennium Copyright Act] (DMCA) for unauthorized uses of copyrighted material. [http://www.nytimes.com/2010/07/25/magazine/25privacy-t2.html]

On the other hand, some have criticized any legal solution to these issues that would restrict the free flow of information. Eugene Volokh [http://www.law.ucla.edu/volokh/privacy.htm#SpeechOnMattersOfPrivateConcern] has expressed concern about the highly troubling "possible unintended consequences of various justifications for information privacy speech restrictions." [http://www.law.ucla.edu/volokh/privacy.htm#SpeechOnMattersOfPrivateConcern] Volokh observed that children, as Internet consumers, are not capable of making contracts, so any assent on their part may be invalid. He did not, however, discuss the topic in any detail.

===Code-Based===

Scholars have proposed several code-based solutions to the problem of online reputation. Jonathan Zittrain has discussed the possibility of expanding online rating systems, such as eBay's, to cover behavior beyond the simple buying and selling of goods, potentially including more general and expansive ratings of people. The reputation system Zittrain describes would allow users to declare reputation bankruptcy, akin to financial bankruptcy, in order "to de-emphasize if not entirely delete older information that has been generated about them by and through various systems." [http://futureoftheinternet.org/reputation-bankruptcy]
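The difference between "de-emphasizing" and "entirely deleting" older information can be made concrete with a toy scoring function. The sketch below is hypothetical and not Zittrain's design: it assumes a numeric rating scale, an exponential time decay with a one-year half-life, and a single declared bankruptcy date before which ratings are multiplied by a chosen weight.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Rating:
    given_on: date
    score: float  # hypothetical scale: -1.0 (negative) to +1.0 (positive)


def reputation(ratings: List[Rating], today: date,
               half_life_days: float = 365.0,
               bankruptcy_date: Optional[date] = None,
               pre_bankruptcy_weight: float = 0.0) -> float:
    """Time-decayed average of rating scores.

    Ratings given before a declared bankruptcy are multiplied by
    pre_bankruptcy_weight: 0.0 deletes them, a small value de-emphasizes them.
    """
    total = 0.0
    weight_sum = 0.0
    for r in ratings:
        age_days = (today - r.given_on).days
        weight = 0.5 ** (age_days / half_life_days)  # halves every half_life_days
        if bankruptcy_date is not None and r.given_on < bankruptcy_date:
            weight *= pre_bankruptcy_weight
        total += weight * r.score
        weight_sum += weight
    return total / weight_sum if weight_sum > 0 else 0.0


history = [Rating(date(2005, 6, 1), -1.0), Rating(date(2010, 6, 1), 1.0)]
today = date(2011, 1, 1)
before = reputation(history, today)
after = reputation(history, today, bankruptcy_date=date(2008, 1, 1))
print(round(before, 2), after)
```

Setting `pre_bankruptcy_weight` to a small positive value instead of `0.0` corresponds to the "de-emphasize" end of Zittrain's phrase; `0.0` corresponds to deletion.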

Alternative code-based proposals would allow content stored online to be deleted via expiration dates, in an attempt to graft the natural process of human forgetting onto the Internet. A major proponent of this approach is Viktor Mayer-Schönberger, who wrote about such digital forgetting through expiration dates in his book “Delete: The Virtue of Forgetting in the Digital Age” [http://press.princeton.edu/titles/8981.html]. Similarly, University of Washington researchers have developed a technology called "Vanish" that encrypts electronic messages so that they effectively self-destruct after a designated time period. [http://www.nytimes.com/2009/07/21/science/21crypto.html]
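The expiration-date idea can be sketched as a store that deletes items once their user-chosen date has passed. This is a minimal, hypothetical illustration: it only works if the host honors the dates, and it cannot reach copies made before expiry. Vanish itself works differently, relying on encryption and on storing pieces of the decryption key in a peer-to-peer network that discards them over time, rather than on a cooperating server.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List


@dataclass
class Item:
    content: str
    expires_at: datetime  # chosen by the user when the item is posted


@dataclass
class ExpiringStore:
    items: List[Item] = field(default_factory=list)

    def post(self, content: str, now: datetime, lifetime: timedelta) -> None:
        self.items.append(Item(content, now + lifetime))

    def visible(self, now: datetime) -> List[str]:
        # Purge expired items so they are actually deleted, not merely hidden.
        self.items = [i for i in self.items if i.expires_at > now]
        return [i.content for i in self.items]


store = ExpiringStore()
t0 = datetime(2010, 7, 1)
store.post("party photo", t0, timedelta(days=30))
store.post("graduation photo", t0, timedelta(days=3650))
print(store.visible(t0 + timedelta(days=60)))  # ['graduation photo']
```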

A less extreme code-based option is the "soft paternalistic" approach proposed by Alessandro Acquisti, in which individuals would receive a "privacy nudge" when sharing potentially sensitive information about themselves online. [http://www.heinz.cmu.edu/~acquisti/papers/acquisti-privacy-nudging.pdf] This nudge would be a built-in feature of, for example, social networking sites, and could either give users helpful privacy information when they post such content or apply a private-by-default setting to all such sensitive information, so that users would have to switch sharing on manually. Such a "privacy nudge" has been compared to Gmail's "Mail Goggles," an optional paternalistic feature designed to stop drunken users from sending email messages that they might later regret. [http://www.nytimes.com/2010/07/25/magazine/25privacy-t2.html]
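Both forms of nudge described above (an informational warning and a private-by-default setting) can be sketched in a few lines. Everything here is a hypothetical illustration rather than Acquisti's implementation: the keyword list stands in for whatever sensitive-content detection a real site would need, and the audience names `"friends"` and `"only_me"` are assumed labels.

```python
import re
from typing import Optional, Tuple

# Hypothetical term list; real detection would need far more than keywords.
SENSITIVE_TERMS = {"drunk", "beer", "vodka", "address", "phone"}


def privacy_nudge(post_text: str) -> Tuple[str, Optional[str]]:
    """Return (default_audience, nudge_message) for a draft post."""
    words = set(re.findall(r"[a-z']+", post_text.lower()))
    hits = sorted(words & SENSITIVE_TERMS)
    if not hits:
        return "friends", None  # hypothetical ordinary default
    # Soft paternalism: warn, and default to the most private audience.
    # The user can still switch sharing on manually before posting.
    message = f"This post mentions {', '.join(hits)}. Who should see it?"
    return "only_me", message


print(privacy_nudge("So drunk at the beer pong party"))
```

The user keeps the final choice, which is what distinguishes a nudge from a ban.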

Hal Abelson recently provided a useful framework for thinking about technology that could support information accountability [http://www.w3.org/2010/policy-ws/slides/09-Abelson-MIT.pdf]. In particular, the capability to let users "manipulate information via policy-aware interfaces that can enforce policies and/or signal non-compliant uses" is relevant, especially in the context of our proposal.

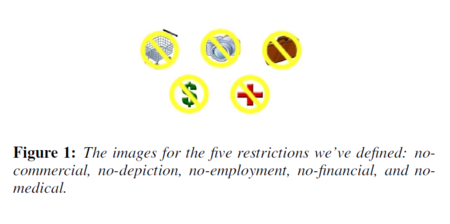

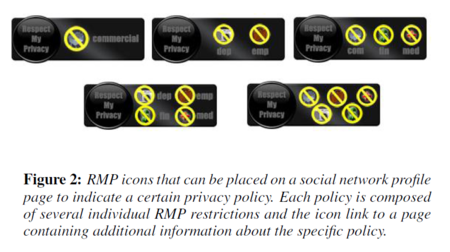

Ted Kang and Lalana Kagal, of MIT [http://www.csail.mit.edu/ CSAIL], have proposed a "Respect My Privacy (RMP) framework." [http://dig.csail.mit.edu/2010/Papers/Privacy2010/tkang-rmp/paper.pdf] Their framework generates privacy and usage-control policies for social networks such as Facebook and visualizes these policies for users. Semantic Web technologies [https://secure.wikimedia.org/wikipedia/en/wiki/Semantic_Web] enable extensive functionality such as "dynamic definition, extension, and re-use of meta-data describing privacy policy, intended purpose or use of data." RMP currently offers five restrictions: no-commercial (similar to its Creative Commons [http://creativecommons.org/] counterpart), no-depiction, no-employment, no-financial, and no-medical.

[[Image:figure1_rmp.png|450px|center]]

[[Image:figure2_rmp.png|450px|center]]
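The five RMP restriction names, combined with Abelson's idea of interfaces that "signal non-compliant uses," can be illustrated with a small checker. This is a hypothetical sketch, not RMP's code: RMP expresses its policies as Semantic Web (RDF) ontology terms, whereas this uses a plain Python enum, and the mapping from declared purposes to restrictions is invented for illustration.

```python
from enum import Enum
from typing import List, Set


class Restriction(Enum):
    # The five restriction names listed for RMP; this encoding is illustrative.
    NO_COMMERCIAL = "no-commercial"
    NO_DEPICTION = "no-depiction"
    NO_EMPLOYMENT = "no-employment"
    NO_FINANCIAL = "no-financial"
    NO_MEDICAL = "no-medical"


# Hypothetical mapping from a declared purpose to the restriction it would breach.
PURPOSE_TO_RESTRICTION = {
    "advertising": Restriction.NO_COMMERCIAL,
    "republish_photo": Restriction.NO_DEPICTION,
    "hiring_decision": Restriction.NO_EMPLOYMENT,
    "credit_check": Restriction.NO_FINANCIAL,
    "health_profiling": Restriction.NO_MEDICAL,
}


def check_use(policy: Set[Restriction], purpose: str) -> List[str]:
    """Return warnings for a proposed use (empty list if compliant).

    Like a policy-aware interface, this signals non-compliant uses;
    it cannot technically stop someone who ignores the warning.
    """
    violated = PURPOSE_TO_RESTRICTION.get(purpose)
    if violated is not None and violated in policy:
        return [f"'{purpose}' conflicts with the owner's {violated.value} policy"]
    return []


owner_policy = {Restriction.NO_EMPLOYMENT, Restriction.NO_COMMERCIAL}
print(check_use(owner_policy, "hiring_decision"))
print(check_use(owner_policy, "credit_check"))  # []
```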

===Market-Based===

Some believe that reputation problems on the Internet are best solved by letting market forces determine the outcome, uninhibited by other regulation. [http://www.pacificresearch.org/publications/id.290/pub_detail.asp] In the wake of concern over online reputation, a number of private companies have emerged to defend it. One such company is [http://www.reputationdefender.com/ Reputation Defender], created in 2006 to help "businesses and consumers control their online lives." [http://www.reputationdefender.com/] For a monthly or yearly fee, Reputation Defender claims it will protect a user's privacy, promote the user online, or suppress negative search results about the user. [http://www.reputationdefender.com/products] Companies like Reputation Defender do offer the average individual some measure of protection. However, such market solutions have several problems. The first is cost. Some Reputation Defender features carry price tags as high as [http://www.reputationdefender.com/business $10,000], far beyond what an ordinary individual can afford to spend sanitizing his or her search results. Even the less expensive services appear to cost between $5 and $10 per month, which may be more than many individuals can afford, especially for long-term protection. The second is effectiveness. It is unclear exactly which tactics such companies use, but for the most part they can offer only short-term solutions, which will face increasing challenges as advances such as facial-recognition technology make it easier to find people online and harder to protect their identities. [http://www.nytimes.com/2010/07/25/magazine/25privacy-t2.html]


===Norm-Based===

It is also possible that reputational issues could be solved through the development of norms. David Ardia, for example, has argued for a multi-faceted approach that focuses on ensuring the reliability of reputational information rather than on imposing liability. Moreover, he advocates enlisting the community, including the online community, to help resolve reputation disputes by enforcing societal norms. [http://papers.ssrn.com/sol3/papers.cfm?abstract_id=1689865]

Norms could also be as simple as asking others "Please don't tweet this" when discussing sensitive or private topics, and expecting that norms will compel them to respect such requests. [http://www.nytimes.com/2010/07/25/magazine/25privacy-t2.html] As the public comes to better understand, and grows comfortable with, technological advances, such norms could help curb the problem of personal information being leaked online.

Another norm-based solution would be simply to educate the public about reputational and privacy concerns in the context of the Internet. This has the potential to be especially effective with children and youth, who are growing up alongside advances in technology but may not understand the full repercussions of their actions. One example is the European Union's youth-focused education campaign, "Think before you post!", designed to "empower[] young people to manage their online identity in a responsible way." [http://europa.eu/rapid/pressReleasesAction.do?reference=SPEECH/10/22] After years of pressure, a number of content intermediaries voluntarily adopted self-regulatory initiatives aimed at improving minors' safety on social networking sites in Europe. [http://europa.eu/rapid/pressReleasesAction.do?reference=SPEECH/10/22] Recent follow-up reports, however, show that the program's success has been less than resounding. Specifically, despite their promises, a majority of the participating companies failed to implement some of the important changes, such as "to ensure the default setting for online profiles and contact lists is 'private' for users under 18." [http://ec.europa.eu/news/science/100209_1_en.htm] Moreover, although many companies added an avenue for youth to report harassment, few of them apparently ever respond to such complaints. [http://ec.europa.eu/news/science/100209_1_en.htm] Thus, while educational and norm-based proposals are a step in the right direction, they may lack sufficient force to bring about the desired changes.


The "Respect My Privacy (RMP)" proposal, mentioned above in the discussion of code-based solutions, also has norm-based features. The authors note that "an accountable system cannot be adequately implemented on social networks without assistance from the social network itself" ([http://www.nytimes.com/2007/11/30/technology/30face.html] in [http://dig.csail.mit.edu/2010/Papers/Privacy2010/tkang-rmp/paper.pdf]). In other words, norms and best practices are required in addition to purely code-based measures to provide a successful, comprehensive solution.


==Proposed Solution==


===Overview===

We propose creating a personal legal right to control content depicting or identifying oneself as a minor, limited by an objective standard and supported by best-practice code and norms under which Content Intermediaries annotate content depicting minors. The specific proposal and its scope are outlined below.

'''Minor's Online Identity Protection Act (MOIPA):'''

MOIPA will take the form of a notice-and-takedown system. The requester will have to demonstrate that his or her request falls within the scope of MOIPA. We envision wide adoption, by the leading Content Intermediaries, of digital watermarks for content depicting minors, as described further below. Such a watermark will serve as prima facie evidence that the content depicts, or contains identifying information about, a minor. Absent a watermark or other metadata (or if a watermark is challenged), the requester will have to provide other evidence of the age and identity of the minor depicted or identified in the content in question (e.g., a government-issued ID or a notarized statement). Simple standardized notice forms would be available to fill out and send online.
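The evidentiary steps above can be read as a short decision procedure for an incoming notice. The sketch below is a hypothetical workflow under our own assumptions: the field names, the outcome strings, and the ordering of checks are illustrative, and "apply objective limitation" refers to the objective-limitation review described later in this proposal.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Content:
    content_id: str
    minor_watermark: bool             # annotation applied when the content was uploaded
    watermark_challenged: bool = False


@dataclass
class TakedownNotice:
    content_id: str
    requester: str
    # e.g. a scan of a government-issued ID or a notarized statement (hypothetical field)
    proof_of_age_and_identity: Optional[str] = None


def evaluate_notice(notice: TakedownNotice, content: Content) -> str:
    """Decide the next step for a MOIPA notice (hypothetical workflow)."""
    if notice.content_id != content.content_id:
        return "reject: notice does not refer to this content"
    # An unchallenged watermark is prima facie evidence that a minor is depicted.
    if content.minor_watermark and not content.watermark_challenged:
        return "prima facie in scope: apply objective limitation"
    # No watermark, or a challenged one: the requester must prove age and identity.
    if notice.proof_of_age_and_identity:
        return "verify submitted proof, then apply objective limitation"
    return "incomplete: request proof of age and identity"


print(evaluate_notice(TakedownNotice("c1", "alice"), Content("c1", minor_watermark=True)))
```

The watermark does not decide the request; it only shifts the burden of proving the requester's age onto whoever challenges it.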


It is important to highlight that MOIPA requires affirmative action on the part of the individual. It would be used only in the limited instances where an individual feels very strongly about the content ''and'' all the required criteria are met. Nothing would be removed or deleted automatically (cf. juvenile record sealing and expungement).

'''Supporting Code and Norms:'''

MOIPA goes hand in hand with a set of best-practice code and norms that will help simplify the notice-and-takedown process. Recent advances in technology, particularly Semantic Web technologies, make it possible to digitally tag and augment all forms of content, even text, with additional information, such as the identities of the Individual Depicted, the Content Creator, and the Content Sharer, along with any relevant and available date/time information. One of the solutions presented in the section above, RMP by Ted Kang and Lalana Kagal [http://dig.csail.mit.edu/2010/Papers/Privacy2010/tkang-rmp/paper.pdf], was based on semantic technologies, in particular a custom-made ontology, to annotate content. As in their proposal, we believe a mix of code and norms is necessary to complement MOIPA and make it successful.
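An annotation carrying the parties and date/time information listed above might look like the following. This is a hypothetical sketch: the field names and the `http://example.org/moipa#` vocabulary are placeholders rather than RMP's actual ontology, and the record is serialized as JSON-LD (a JSON-based format for the same kind of linked-data statements) for brevity; the same statements could instead be embedded in a page as RDFa.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class MinorAnnotation:
    # Hypothetical fields mirroring the parties named in the text.
    content_url: str
    individual_depicted: str
    content_creator: str
    content_sharer: str
    created_at: str      # ISO 8601 date/time, if available
    depicts_minor: bool


def to_jsonld(a: MinorAnnotation) -> str:
    """Serialize the annotation with a hypothetical 'moipa' vocabulary."""
    doc = {"@context": {"moipa": "http://example.org/moipa#"}, "@id": a.content_url}
    for key, value in asdict(a).items():
        if key != "content_url":
            doc[f"moipa:{key}"] = value
    return json.dumps(doc, indent=2)


note = MinorAnnotation(
    content_url="https://photos.example.com/p/123",
    individual_depicted="user:alice",
    content_creator="user:bob",
    content_sharer="user:carol",
    created_at="2010-11-20T18:30:00Z",
    depicts_minor=True,
)
print(to_jsonld(note))
```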

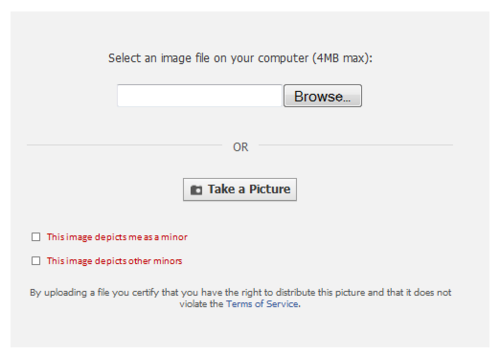

Content could either be tagged automatically when posted from accounts identified as belonging to minors, or the poster could be required to tick an affirmative check box if the content depicts or identifies minors (similar to “I have accepted the terms of service” or “I have the right to post this content” boxes); the two approaches could also be combined.

[[Image:Facebook_Example.PNG|500px|center]]
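The tagging options just described (automatic tagging for minors' accounts, an affirmative check box, or both) differ in which content they catch. The sketch below compares them; the `TaggingMode` names are hypothetical labels for the options in the text.

```python
from enum import Enum


class TaggingMode(Enum):
    AUTO = "auto"          # tag everything posted from accounts identified as minors'
    CHECKBOX = "checkbox"  # rely on the poster ticking "this depicts a minor"
    BOTH = "both"          # either signal is enough


def should_tag_as_minor(mode: TaggingMode, poster_is_minor: bool,
                        box_checked: bool) -> bool:
    if mode is TaggingMode.AUTO:
        return poster_is_minor
    if mode is TaggingMode.CHECKBOX:
        return box_checked
    return poster_is_minor or box_checked


# An adult posting a photo of a 15-year-old without ticking the box:
# no mode tags it, which is why the check box depends on norms and honesty.
print([should_tag_as_minor(m, poster_is_minor=False, box_checked=False) for m in TaggingMode])
```

Combining both signals catches the most content, but an adult who ignores the check box still slips through, which is one reason the proposal pairs code with norms.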

Ideally, every piece of relevant content submitted to a Content Intermediary would carry such annotations; this is particularly important given the role of Search Engines, as explained below.

'''Objective Limitation:'''

MOIPA would also contain an objective limitation to ensure that only reasonable and legitimate requests are honored and to limit potential abuses of the law. The limitation would also help MOIPA survive First Amendment scrutiny if challenged. It would resemble the limitation found in many torts, including the public disclosure of private fact tort, which provides a cause of action where (among other elements) the disclosure would be "offensive to a reasonable person." [http://www.citmedialaw.org/legal-guide/publication-private-facts]

It is important, however, that the law remain relatively simple to implement and not produce prolonged legal battles that waste time and resources. Simple, streamlined ways of enforcing the objective limitation would therefore be preferable. One option that would also co-opt technology would be to crowd-source the determination to a number of others (possibly a "jury" of 12), asking whether the content is objectively embarrassing, unfavorable, or offensive. More broadly, these outsiders could simply decide whether it is objectively "reasonable" for the individual to request that the identifying or depicting content be taken down. A simple majority finding that the content is sufficiently embarrassing or offensive could suffice. Jurors would not be able to download the content and would be bound by an agreement not to share or disclose it themselves.
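The crowd-sourced jury can be sketched as random selection followed by a simple-majority vote. The jury size of 12 and the simple-majority rule come from the text; the random draw from a user pool, the exclusion of the parties to the dispute, and the treatment of a 6-6 tie as a failed request are our own assumptions.

```python
import random
from typing import List, Optional, Set


def select_jurors(pool: List[str], excluded: Set[str], size: int = 12,
                  seed: Optional[int] = None) -> List[str]:
    """Randomly draw distinct jurors, skipping the parties to the dispute."""
    eligible = [p for p in pool if p not in excluded]
    return random.Random(seed).sample(eligible, size)


def jury_upholds_request(votes: List[bool]) -> bool:
    """True if a simple majority finds the request objectively reasonable.

    With 12 jurors, a 6-6 tie is not a majority, so the request fails.
    """
    return sum(votes) > len(votes) / 2


pool = [f"user{i}" for i in range(100)]
jurors = select_jurors(pool, excluded={"user1", "user2"}, seed=42)
votes = [True] * 7 + [False] * 5
print(len(jurors), jury_upholds_request(votes))  # 12 True
```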

===Involved Parties===

We have identified the following parties to be of importance when discussing our proposed solution.

*'''Individual Identified or Depicted'''

The Individual Identified or Depicted is at the center of our proposal and our attention. MOIPA is a personal right available to an individual with respect to contested content about him or her as a minor.

*'''Content Creator'''

The Content Creator is the person who creates content (e.g., takes a picture) and typically holds the copyright in it.

*'''Content Sharer'''

The Content Sharer is the person who uploads content to Content Intermediaries. In most cases, the Content Sharer and the Content Creator will be the same person, but not always.

*'''Content Intermediaries'''

Content Intermediaries include personal blogging platforms such as WordPress or Blogger as well as social networking sites such as Facebook and MySpace.

Facebook already uses semantic technologies (without going into too much detail, it has been using RDFa, a Semantic Web markup language, to provide additional metadata about its content [http://www.readwriteweb.com/archives/facebook_the_semantic_web.php]) and could easily expand this metadata to cover privacy and minor-related information as suggested above. The same is true for popular blogging services such as WordPress or Blogger.

*'''Search Engines'''

Search Engines, such as Google or Microsoft Bing, could play an important part in the successful implementation of our proposal. Google already uses RDFa to augment search results (see, for example, [http://www.slideshare.net/mark.birbeck/rdfa-what-happens-when-pages-get-smart]). Similarly, Google could implement a MOIPA best-practice directive that would, for example, exclude certain content related to minors that is annotated in a particular way. Because of their vast power over what content people actually find and see on the Internet, search engines are an ideal target for a law like MOIPA. A focus solely on search engines, although not our current proposal, is a possible alternative to consider in the future, especially since it might be easier to implement than the suggested notice-and-takedown system.
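A search engine honoring such a directive would filter annotated pages out of its results while leaving the pages themselves online. The sketch below assumes a hypothetical annotation value, `moipa:exclude-from-search`; the idea is conceptually similar to the existing robots `noindex` directive, but keyed to a minor-specific annotation.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List

# Hypothetical annotation value a MOIPA best-practice directive might define.
EXCLUDE_MARKER = "moipa:exclude-from-search"


@dataclass
class Result:
    url: str
    annotations: FrozenSet[str] = field(default_factory=frozenset)


def apply_moipa_directive(results: List[Result]) -> List[Result]:
    """Drop results whose page carries the exclusion annotation.

    The page itself stays online; it simply stops appearing in results.
    """
    return [r for r in results if EXCLUDE_MARKER not in r.annotations]


results = [
    Result("https://example.com/news-story"),
    Result("https://example.com/old-photo", frozenset({EXCLUDE_MARKER})),
]
print([r.url for r in apply_moipa_directive(results)])
```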

''Note: some of these parties could be the same person in a given scenario.''

===Scope===

In our opinion, the scope of MOIPA must cover all content that depicts or identifies minors. To address First Amendment concerns, minors who are of "legitimate public interest" (e.g., celebrities, performers and actors, and possibly children of famous public figures) would be excluded from the scope of our proposal.

'''Identity-related content:'''

Within the scope of our proposal, identity-related content can take various forms, ranging from pictures to videos to text.

While a general discussion of the Star Wars Kid [https://secure.wikimedia.org/wikipedia/en/wiki/Star_Wars_Kid] is not necessarily harmful to the boy, content that links his real name to the footage is potentially harmful to him and adds very little public value. Such content should not be removed or deleted automatically, but he should have the right to have the identifying information removed.

'''Minors:'''

In our opinion, minors present a particularly compelling case for MOIPA. The age of 18 is a cutoff already recognized by the government and drawn in numerous contexts. One reference point is the various provisions for wiping juvenile criminal records (e.g., expungement) [http://criminal.findlaw.com/crimes/expungement/expungement-state-info.html].

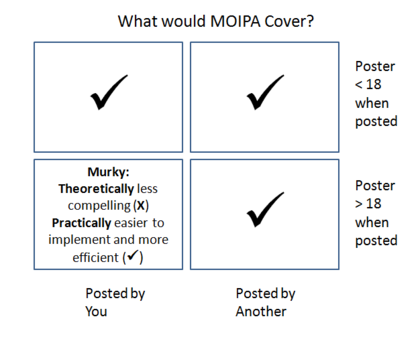

With respect to the age cutoff, four scenarios are possible, as illustrated in the chart below.

(1) Content about oneself, posted while still a minor (younger than 18)

(2) Content about another minor, posted while the poster is also a minor (younger than 18)

(3) Content about a minor, posted while the poster is an adult

(4) Content about oneself, posted while the poster is an adult

[[Image:moipa2.PNG|420px|center|alt MOIPA Scope]]

Our proposal clearly covers scenarios (1), (2), and (3), but we believe (4) deserves further discussion. Although covering it might be less compelling in theory, in our opinion it would be easier and more efficient to implement in practice.
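Classifying a post into the four scenarios requires only each person's age on the posting date. The sketch below is hypothetical: it takes the 18-year cutoff from the text, evaluates ages at the time of posting as the listed scenarios do, assumes the content depicts its subject as a minor, and returns `None` for combinations the list does not name.

```python
from datetime import date
from typing import Optional

AGE_OF_MAJORITY = 18


def age_on(birth: date, day: date) -> int:
    # Subtract one if the birthday has not yet occurred in that calendar year.
    return day.year - birth.year - ((day.month, day.day) < (birth.month, birth.day))


def is_minor(birth: date, day: date) -> bool:
    return age_on(birth, day) < AGE_OF_MAJORITY


def scenario(poster_is_subject: bool, poster_minor: bool,
             subject_minor: bool) -> Optional[int]:
    """Map ages at the time of posting to scenarios (1)-(4); None if none applies."""
    if poster_is_subject:
        return 1 if poster_minor else 4
    if subject_minor:
        return 2 if poster_minor else 3
    return None  # e.g. content about someone who was already an adult when posted


posted = date(2010, 9, 1)
subject_birth = date(1994, 5, 12)  # 16 on the posting date
poster_birth = date(1990, 1, 3)    # 20 on the posting date
print(scenario(False, is_minor(poster_birth, posted), is_minor(subject_birth, posted)))  # 3
```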

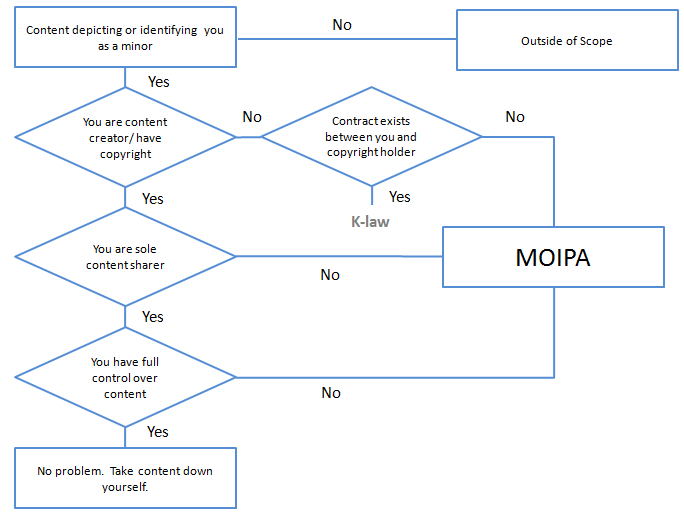

There are certain other scenarios in which MOIPA would not be available, for example because of contracts entered into over the content in question. The chart below provides a framework for what is and is not covered under MOIPA, from the perspective of a person looking for ways to remove content about him- or herself.

[[Image:moipa1.PNG|center|alt MOIPA Scope]]
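The availability conditions stated in the prose of this proposal can be combined into a single eligibility check: the content must depict or identify the requester as a minor, the requester must not be of legitimate public interest, no contract may govern the content, and the objective limitation must be met. This hypothetical sketch is drawn from the text, not from the chart, whose details are not reproduced here; the field names, the order of checks, and the example of a signed release are assumptions.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Claim:
    depicts_or_identifies_requester: bool
    requester_was_minor_in_content: bool
    requester_of_public_interest: bool  # e.g. a child actor or celebrity
    governed_by_contract: bool          # e.g. a signed release (hypothetical example)
    objective_limitation_met: bool      # e.g. a crowd-jury majority


def moipa_available(c: Claim) -> Tuple[bool, str]:
    """Return (available, reason), checking conditions in a fixed order."""
    checks = [
        (c.depicts_or_identifies_requester, "content does not depict or identify the requester"),
        (c.requester_was_minor_in_content, "requester was not a minor in the content"),
        (not c.requester_of_public_interest, "requester is of legitimate public interest"),
        (not c.governed_by_contract, "a contract governs the content"),
        (c.objective_limitation_met, "objective limitation not met"),
    ]
    for passed, reason in checks:
        if not passed:
            return False, reason
    return True, "takedown available"


print(moipa_available(Claim(True, True, False, False, True)))
print(moipa_available(Claim(True, True, True, False, True)))
```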

===Is a Legal Measure Necessary?===


It is possible that the burgeoning problems of online identity and reputation will dissipate naturally on their own. A law or other significant change might therefore ultimately prove unnecessary, so we do not recommend immediate adoption of our proposal. Instead, it may be worthwhile to observe the trends in the hope that less drastic yet effective solutions appear.

For example, some believe that society will adapt to the ramifications of the Internet: either people will adjust their (online) behavior accordingly, or such transgressions will become so commonplace that their negative impact will disappear. Much of the information available now, however, suggests that this will not be the case. Since the birth of the Web in the early 1990s, the Internet has rapidly become indispensable to a broad cross-section of society -- yet we observe few behavioral changes to protect personal content. If anything, users of all ages have grown more willing than ever to share their private information, personal photos, and more. Our sensibilities to social and cultural "transgressions," however, have remained largely unchanged. Employers continue to conduct Internet and social network research on prospective (and current) employees, and then make hiring and firing decisions based on that information. The public has not grown to accept these circumstances as normal: embarrassing situations continue to be embarrassing and can carry severe consequences, seemingly no matter how universal they are or how many times similar conduct occurs (see, e.g., Chander’s discussion of whether “society will become inured to the problem” [http://www.amazon.com/Offensive-Internet-Speech-Privacy-Reputation/dp/0674050894/ref=sr_1_1?s=books&ie=UTF8&qid=1290199043&sr=1-1]). Ideally, society would evolve and adapt to these changes naturally, but so far there has been no significant change in this area. Moreover, children and adolescents are probably the least likely to pick up on these social cues and to understand the full ramifications of their online behavior. Our primarily law-based solution is thus a possible avenue for government to protect the online identity and reputation of minors absent any other meaningful changes.

Before adopting such a law, which carries some significant ramifications of its own, some time should be taken to see whether other solutions will appear. At present, however, such solutions seem unlikely. For example, there is currently no real pressure on content-hosting platforms or search engines to adopt any code-based or norm-based solutions, and there is no reason to expect that they will spend time, money, and other resources to voluntarily adopt protective measures that are not required (notably, even with pressure from the European Union's efforts to protect youth privacy online, more than half of the companies involved in that effort failed to follow through on their promises [http://ec.europa.eu/news/science/100209_1_en.htm]). To the extent that companies such as Reputation Defender exist to solve these problems, they are problematic in that they are (i) available only to those who can afford them and (ii) unable to offer full protection because they lack any real power or authority over the sources. Thus, absent any unexpected and meaningful changes, the MOIPA proposal is an effort to adequately protect the most vulnerable segment of the population and to ensure that youthful mistakes do not haunt an individual forever.

==Implications and Potential Concerns==

===The First Amendment===

Any law which restricts speech or expression raises important First Amendment concerns. In relevant part, the [http://topics.law.cornell.edu/constitution/billofrights#amendmenti First Amendment] reads: “Congress shall make no law… abridging the freedom of speech.” [http://topics.law.cornell.edu/constitution/billofrights#amendmenti] This prohibition has been interpreted to allow restrictions on various narrow and defined categories of speech [http://www.law.cornell.edu/supct/html/historics/USSC_CR_0315_0568_ZS.html] (e.g. [http://www.law.cornell.edu/supct/html/historics/USSC_CR_0413_0049_ZS.html obscenity]; [http://www.law.cornell.edu/supct/html/historics/USSC_CR_0315_0568_ZS.html fighting words]; [http://www.law.cornell.edu/supct/html/historics/USSC_CR_0458_0747_ZS.html child pornography]). MOIPA does raise certain First Amendment concerns, but its objective limitation and exception for minors of legitimate public interest, coupled with the state’s compelling interest in protecting the well-being of minors, should be sufficient for the law to survive constitutional challenge.

The Supreme Court has long recognized the well-being of minors as a legitimate and even compelling state interest, permitting legislation in this realm even where constitutional rights (including First Amendment rights) are implicated. For example, in ''Globe Newspaper v. Superior Court for Norfolk County'', the Court expressly acknowledged that the interest in “safeguarding the physical and psychological well-being of a minor is a compelling one.” [http://www.law.cornell.edu/supct/html/historics/USSC_CR_0457_0596_ZS.html] Moreover, in the context of regulation of child pornography, the Court observed: “we have sustained legislation aimed at protecting the physical and emotional well-being of youth even when the laws have operated in the sensitive area of constitutionally protected rights.” [http://www.law.cornell.edu/supct/html/historics/USSC_CR_0458_0747_ZS.html]

The Supreme Court has also required that such regulations of speech and other constitutional rights be narrowly tailored to the interest, so that they are not unnecessarily broad or restrictive. [http://www.law.cornell.edu/supct/html/historics/USSC_CR_0457_0596_ZS.html] By excepting content about celebrity minors or other minors who fall into the category of the legitimately newsworthy, we have aimed to tailor MOIPA so that it is not overly broad. In addition, the objective limitation, which resembles the public disclosure of private fact tort recognized in many states, further tailors MOIPA so that it is no broader than necessary to shield minors in instances deserving protection. The objective limitation also curbs potential abuses of the law; otherwise, MOIPA could be vulnerable to abuse as a legal lever for personal grievances. For instance, to the extent that violating MOIPA may be grounds for a civil lawsuit, one could imagine a scenario where a minor girlfriend breaks up with a boyfriend and then threatens a lawsuit if the ex-boyfriend does not scrub all records of her from his social networking sites. Likewise, if content intermediaries shared liability, lawsuits against Facebook could become commonplace. The objective limitation thus also limits individuals' ability to seek judicial remedies for such grievances and avoids any undue burden on the justice system.

Furthermore, it is important to note that MOIPA covers only identifying or depicting information or content. Only content that specifically identifies or depicts an individual would have to be removed (e.g., a poster need only redact the minor's name from a discussion, leaving the rest of the discussion outside the reach of the law, and can blur, crop, or cut the minor's image from a photo or video). Thus, any content not specifically related to the minor's identity would remain untouched by MOIPA. This narrowed focus protects the identity of the minor while allowing important discussion and expression protected by the First Amendment to continue.

===Technical Limitations===

In theory, using Semantic Web technologies to annotate content would be sufficient to identify material about minors. However, people might scrape content or repost pictures, which can strip such annotations. A combination of Semantic Web annotation and digital watermarks might therefore offer better protection. As with all technical solutions, however, loopholes will persist (for example, someone could photograph a computer screen with a camera and post that picture).
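One way to see why annotation alone is fragile: if annotations were looked up by an exact fingerprint of the file, any copy that differs by even one byte would no longer match. The minimal Python sketch below assumes, purely for illustration, a hypothetical registry keyed by SHA-256 digests; it does not implement watermarking or perceptual hashing, which are the kinds of techniques that aim to survive such changes.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Exact-match fingerprint: the SHA-256 hex digest of the raw bytes."""
    return hashlib.sha256(content).hexdigest()


# Hypothetical registry of content annotated as depicting a minor.
original = b"original photo bytes"
annotated = {fingerprint(original): {"depicts_minor": True}}


def lookup(content: bytes):
    """Return the annotation for this exact content, or None if unknown."""
    return annotated.get(fingerprint(content))


reposted = original + b"\x00"  # stands in for a re-encoded or lightly edited copy
print(lookup(original))  # {'depicts_minor': True}
print(lookup(reposted))  # None: a single changed byte breaks the match
```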

A further question is whether the proposal would lead to abuse or overuse of the digital watermarks and metadata. We see no strong evidence that it would, given the well-defined scope of the proposal and the fact that the watermark creates only a rebuttable presumption. Were this to become a serious or pervasive problem, a misuse clause could be added under which anyone who repeatedly misused or abused the digital watermarks would lose their takedown rights under MOIPA.
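The misuse clause could work as a simple per-claimant counter. The minimal Python sketch below assumes that misuse has already been established by some outside process and that rights lapse after a fixed number of findings; both the class name and the threshold are hypothetical, since the proposal does not specify either.

```python
from collections import Counter

MISUSE_LIMIT = 3  # hypothetical threshold; the proposal sets no number


class TakedownRights:
    """Tracks established watermark misuse per claimant (illustrative only)."""

    def __init__(self, limit: int = MISUSE_LIMIT):
        self.limit = limit
        self.misuses = Counter()  # claimant -> number of misuse findings

    def record_misuse(self, claimant: str) -> None:
        self.misuses[claimant] += 1

    def may_request_takedown(self, claimant: str) -> bool:
        # Counter returns 0 for unknown claimants, so newcomers keep their rights.
        return self.misuses[claimant] < self.limit
```

Keeping the rule this mechanical reflects the aim of a simple notice-and-takedown process, though any real clause would need due-process safeguards that code cannot express.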

===Notice and Takedown===

The notice-and-takedown system should not require payment to utilize (unlike the DMCA) -- the right to control should be available to all. Otherwise, MOIPA could result in a social imbalance where the most well-off have the most "clean" records, or are more likely to engage in "youthful indiscretions" knowing they can make corrections later. The cost of cleansing a reputation is one of the primary concerns with market-based solutions, such as Reputation Defender.

In general, takedown notices could be sent to whatever contact information is provided for a given website, content intermediary, or search engine. To implement an efficient and effective system, it might be prudent to require sites to list a MOIPA-designated agent (similar to a DMCA-designated agent; in fact, one agent could serve both functions) to receive takedown notices.
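Routing a notice under this scheme is straightforward: use the designated agent if the site lists one, and otherwise fall back to the site's general contact. The minimal Python sketch below uses hypothetical class and field names; because the proposal requires no payment, the sketch has no fee step.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Site:
    domain: str
    general_contact: str
    moipa_agent: Optional[str] = None  # hypothetical MOIPA-designated agent


@dataclass
class TakedownNotice:
    requester: str
    url: str
    description: str  # which identifying content (name, face) should be removed


def route_notice(notice: TakedownNotice, site: Site) -> str:
    """Address for the notice: the designated agent first, else the general contact."""
    return site.moipa_agent or site.general_contact
```

A site that already lists a DMCA agent could simply reuse that address as its `moipa_agent`, matching the suggestion that one agent serve both functions.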

===Impact for Content Intermediaries===

MOIPA would have a significant impact on content intermediaries, such as search engines and social networking sites, which are major aggregators of content posted by or about youths. A central question is who is responsible for what: does an intermediary like Facebook bear the same degree of liability as the third-party partners who create apps and make use of Facebook data? MOIPA would bring both advantages and disadvantages for content intermediaries, including:

'''Advantages:'''

:*Public goodwill (parents might feel more comfortable with children using the site)

:*Gain additional insights from enhanced user/content metadata

:*Potential new market for content management systems, e.g., services to search for, track, and delete material across the Internet

'''Disadvantages:'''

:*Penalties for violating MOIPA?

:*Could databases and archives include references to deleted data (e.g., mark them as "removed")?

:*Differentiate liability of main intermediaries and third-party app makers?

As we consider the effects on intermediaries, metadata tags for material about minors might have an unintended consequence: encouraging new marketplaces for such material. For example, it may be relatively easy to design an aggregator that compiles photographs of minors and then sells this material to third parties for whatever purposes. One application would be a company that compiles photos, then contacts the featured people and requests payment for scrubbing the photos from the Internet (issuing and managing notice-and-takedown requests). A more ethically dubious version would be a service that spots embarrassing photos (perhaps through a crowd-sourced, GWAP-style game that enlists taggers to find the most humiliating material) and requests payment for scrubbing them. These situations are purely hypothetical, yet such unintended consequences should be factored into the design and implementation of MOIPA. By allowing the personal legal right to be exercised without any fee (or costly judicial proceeding), and by attempting to simplify the notice-and-takedown system to a brief online form, we have aimed to address these concerns, while recognizing that no system is without imperfections.

===Effects on Behavior of Minors===

To ensure that MOIPA has a net social benefit, it will be important to educate minors about best practices for posting and removing content, now and in years to come. One may assume that if minors have seen and heard about the reputational damage inflicted on those who post material with reckless abandon, and are well educated about the MOIPA response, they will be less prone to posting "risky" material -- especially about other minors -- out of concern that they may need to remove it years later and could even face legal action if they do not. Yet it is also possible that new protections for minors' content will have the opposite, unintended consequence: lowering inhibitions and promoting even riskier behavior online. Given their ability to retract photos and text later, teenagers may assume they have a temporary "free pass" to post whatever they wish. Perhaps the deciding factor will be how easy or difficult it eventually is to manage material about minors. Youths may be least inhibited if a commercial content management service (yet to be invented) allows posters to easily search by date and friends' names and remove hundreds of offending images with a few clicks. Absent such a service, minors should be educated about the needless effort that will be required of them if they share large volumes of risky or unfavorable content about themselves intending to track it down and have it removed later. Moreover, because of the objective limitation, they are not guaranteed to have the material removed even if they want it removed. Overall, as with most laws, many lay people will probably be largely unaware of or uninterested in MOIPA unless they have serious reputational issues linked to content about them on the Internet. Even among those who are fully aware of their rights under MOIPA, most will probably be unwilling to exert the effort to locate instances of such content and initiate a takedown, in part because much of the content about minors will not be damaging enough to induce them to use (or to qualify for) MOIPA's benefits.

===Implications for Adults===

A challenging situation arises when adults post material about themselves from when they were minors. Should this material be protected? In theory, it is more difficult to justify protecting this material on moral grounds (as society holds adults more responsible than youths for their actions), but on a practical level it may be necessary to extend protection to any material about minors, regardless of when it is posted. This is largely because content can be copied and reposted on many other sites, making it hard to follow the trail back to when, where, and by whom it was originally posted. To the extent that discovering the original posting information could require lengthy or complicated factual investigations, it would undermine the simplified aim of the notice-and-takedown system.
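If protection attaches to any material about minors regardless of when it is posted, the relevant test keys on the subject's age when the content was created, not on the posting date. The minimal Python sketch below uses hypothetical function names and assumes the creation date is known; in practice, establishing that date may itself require some of the factual investigation the paragraph above warns against.

```python
from datetime import date


def age_on(birth: date, on: date) -> int:
    """Whole years of age on a given date."""
    had_birthday = (on.month, on.day) >= (birth.month, birth.day)
    return on.year - birth.year - (0 if had_birthday else 1)


def depicts_minor(birth: date, created: date, majority: int = 18) -> bool:
    """True if the subject was under the age of majority when the content was created.

    The posting date is deliberately not an input: a photo taken at 16 and
    reposted at 30 is treated the same as one posted at 16.
    """
    return age_on(birth, created) < majority
```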

Given the potential liabilities of posting material about minors, this law could have a chilling effect, discouraging adults and institutions from posting any materials about minors. For example, school systems may be reluctant to post class photos out of concern that alumni may issue notice-and-takedown requests to remove themselves from group pictures, forcing the school either to remove or to digitally alter the photos. For a single group picture, one can imagine a steady stream of such requests over years and decades, so that the school is forced to progressively remove faces until only a few children remain. Given hundreds of class photos, the task could become daunting. Alternatively, it would be far easier (and less expensive) for the school to prohibit posting any photographs, which could have a detrimental effect on the students and on historical records. These potential concerns are addressed by MOIPA's objective limitation, however, and it is highly unlikely that banal photographs such as school class photos would warrant an actual takedown. This is so unlikely that most people would probably recognize it and not send a notice in the first place.

Another implication for adults is that parents and guardians might seek to invoke the law on behalf of their children. For example, in the school photo scenario above, one can imagine a mother who does not like the depiction of her 5-year-old son and feels empowered to take action against the school. Or, more broadly, an over-protective parent might request that photos from birthday parties be removed from a friend's Facebook page. It is hoped, however, that before resorting to MOIPA, parents would first simply ask the friend to remove the offending photo, as would probably occur absent the law. MOIPA is intended as a resort for those with a real reputational grievance; it is not intended to -- nor is it likely to -- replace social norms. Nevertheless, any negative impact of such parental responses is again tempered by the objective reasonableness limitation in the law: school photos and ordinary birthday party photos would be very unlikely to satisfy all of the takedown requirements.

==Relevant Sources for Further Reading==

*[http://www.nytimes.com/2010/07/25/magazine/25privacy-t2.html “The End of Forgetting” NY Times 7/25/10, Jeffrey Rosen]

:*Discusses the implications of how the web takes away "second chances," such that "the worst thing you've done is often the first thing everyone knows about you."

*[http://www.amazon.com/Offensive-Internet-Speech-Privacy-Reputation/dp/0674050894/ref=sr_1_1?s=books&ie=UTF8&qid=1290199043&sr=1-1 "Youthful Indiscretion in an Internet Age," Anupam Chander]

:*Supports a strengthening of the public disclosure tort while recognizing two principal legal hurdles: (1) the youth’s indiscretion may itself be of legitimate interest to the public (newsworthy) and (2) intermediaries can escape demands to withdraw info posted by others because of special statutory immunity.

*[http://futureoftheinternet.org/reputation-bankruptcy "Reputation Bankruptcy" Blog Post, 9/7/2010, on The Future of the Internet and How to Stop It, Jonathan Zittrain]

:*Suggests that intermediaries who demand an individual's identity on the internet "ought to consider making available a form of reputation bankruptcy."

*[http://dig.csail.mit.edu/2010/Papers/Privacy2010/tkang-rmp/paper.pdf “Enabling Privacy-awareness in Social Networks,” Ted Kang and Lalana Kagal]

:*Proposes a “Respect My Privacy” framework focused on using code to display privacy preferences in order to make users more aware of the restrictions associated with shared data, relying largely on social norms for compliance.

*[http://papers.ssrn.com/sol3/papers.cfm?abstract_id=1689865 "Reputation in a Networked World," David S. Ardia]

:*Argues that the focus should be on ensuring the reliability of reputational information rather than on imposing liability, and advocates community governance.

*[http://www.law.ucla.edu/volokh/privacy.htm#SpeechOnMattersOfPrivateConcern "Freedom of Speech and Information Privacy: The Troubling Implications of a Right to Stop People From Speaking About You," Eugene Volokh]

:*Concludes that "[t]he possible unintended consequences of various justifications for information privacy speech restrictions... are sufficiently troubling" to justify opposing such restrictions.

Latest revision as of 06:46, 13 August 2020

==Importance of Online Identity and Reputation==

As the first generation to grow up entirely in the digital age reaches adulthood, what has already been identified as a difficult problem could have serious and damaging consequences. Vast amounts of personal content have already been digitally captured in perpetuity, and more is added every day. As one New York Times article aptly observed, the Internet effectively takes away second chances, so that "the worst thing you've done is often the first thing everyone knows about you." [4] Others have noted that "[t]he Internet erases inhibitions," which tends to make people, especially young people, more likely to act out or behave badly online. [5] In addition, the viral dynamics of the Internet and of search engines -- which can quickly rank information based in part on popularity -- may amplify reputational damage. Moreover, as facial-recognition technology continues to advance, it will pose a stark challenge to "our expectation of anonymity in public." [6]

Minors are particularly vulnerable to these developments because they are the least able to fully comprehend the ramifications of online behavior and are unlikely to exercise the maturity and restraint that we expect of adults (even if adults do not always exercise such restraint in practice). Congress has already recognized the importance of protecting children in this regard by passing several pieces of legislation, the most relevant of which is the Children's Online Privacy Protection Act (COPPA). One failing of COPPA, however, is that its protection ends at age thirteen. While young children may be the least cognitively advanced, it is older children and adolescents who are more likely to have sensitive and damaging content posted about them on the internet (e.g., photos of the youth drinking or smoking with friends, or sexually charged material). More importantly, COPPA has proven ineffective even at shielding the age group it is designed to protect: studies show that as many as 37% of children aged 10-12 have Facebook accounts, and there are approximately 4.4 million Facebook users under age thirteen in the United States, even though Facebook's policy complies with COPPA standards. [7]

The lack of effective protection for minors, coupled with the staggering number of minors who post private content on the Internet, calls for a solution. This youthful web-based content also has serious implications for these young people's lives going forward: a recent Microsoft study reports that 75% of US recruiters and human-resource professionals say their companies require them to do online research about candidates, and many use a range of sites when scrutinizing applicants. [8] While these reputational problems also exist for those who posted content as adults, the problem of online identity and reputation is particularly compelling in the case of minors.

==The Recent Scholarship and Other Proposed Solutions==

===Law-Based===

The primary existing law to protect children on the internet is the Children's Online Privacy Protection Act (COPPA). The scope of COPPA is directed to the operators of websites and dictates: "If you operate a commercial Web site or an online service directed to children under 13 that collects personal information from children or if you operate a general audience Web site and have actual knowledge that you are collecting personal information from children, you must comply with the Children's Online Privacy Protection Act." This law, however, is quite narrow and does little in practice to protect children on the Internet. Children under the age of thirteen can simply lie about their dates of birth when creating accounts, and because the obligations of general-audience sites turn on actual knowledge, content hosts and intermediaries face few repercussions for failing to prevent even such blatant tactics. It should be noted, though, that the FTC has successfully pursued some financial penalties and settlements. [9]

Congress has passed additional laws to protect children in the online realm, with differing results. One such law, the Children's Internet Protection Act (CIPA), requires schools and public libraries to employ certain content filters to shield children from harmful or obscene content in order to receive federal funding. The Supreme Court upheld the constitutionality of this law in United States v. American Library Association. [10] On the other hand, the Child Online Protection Act (COPA), which was designed to restrict children's access to any material deemed harmful to them on the internet, did not survive its legal challenges. In upholding a lower court's preliminary injunction, the Supreme Court found the law unlikely to be sufficiently narrowly tailored to pass First Amendment scrutiny. [11]

State law provides some existing tort remedies for privacy violations, though they are not necessarily geared toward children. For example, thirty-six states already recognize a tort for "public disclosure of private fact." Essentially, this tort bars dissemination of non-newsworthy personal information that a reasonable person would find highly offensive. [12] Some state laws are more specific, such as criminal laws forbidding the publication of the names of rape victims (for an argument against such laws, see Eugene Volokh [13]). Anupam Chander has argued in a forthcoming article, "Youthful Indiscretion in an Internet Age" (to appear in "The Offensive Internet," 2011 [14]), that the tort for public disclosure of private fact should be strengthened.

Chander also recognizes two important legal hurdles to strengthening the public disclosure of private fact tort. The first is that the indiscretion at issue may be legitimately newsworthy, which raises serious First Amendment concerns. The second is that intermediaries are often protected from liability under the Communications Decency Act (CDA). Despite these obstacles, Chander persuasively argues that such protection is needed, particularly in the context of nude images, because society's fascination with embarrassing content will not abate. Moreover, he observes that an individual's humiliation "does not turn on whether some activity is out of the ordinary or freakish"; rather, common behavior can still cause significant personal damage.

Other legal proposals have also been put forward to curb the problems of online identity and reputation. One set of proposals is geared toward employers. For instance, Paul Ohm has supported a law that would bar employers from firing current employees, or declining to hire prospective ones, based on legal, off-duty conduct found on social networking profiles. [15] [16] Germany is also considering a privacy law that would ban employers from mining social networking sites such as Facebook for information on job applicants. The law would potentially impose significant fines on employers who violate it. German government officials have noted, however, that the law could be difficult to enforce because violations would be difficult to prove. [17]

Meanwhile, Dan Solove has considered a legal proposal that would give individuals a right to sue Facebook friends for certain breaches of confidence that violate one's privacy settings. [18][19] He finds it problematic that the law provides no protection when others wrongfully spread your secrets, and he believes that the United States should adopt a regime that better protects people from such transgressions. [20] In some other countries, such as England, the law does provide broader protection where friends and ex-lovers have breached a duty of confidentiality. [21]

A more drastic idea, mentioned by Peter Taylor, would be to create a constitutional right to privacy or “oblivion” to allow for more anonymity. [22] Less radical, but still significant in its own right, Cass Sunstein has proposed "a general right to demand retraction after a clear demonstration that a statement is both false and damaging." [23][24] This bears some resemblance to defamation and libel laws, but where those laws would normally only require that the speaker or publisher pay adequate reputation damages, Sunstein's approach is specifically geared towards the removal of the material. In fact, his proposal is largely based on the existing Digital Millennium Copyright Act (DMCA) notice-and-takedown system for unauthorized uses of copyrighted material. [25]

On the other hand, some have criticized legal solutions to these issues that would in any way restrict the free flow of information. Eugene Volokh [26] has expressed concern about highly troubling "possible unintended consequences of various justifications for information privacy speech restrictions." [27] Volokh observed that children, as Internet consumers, are not capable of making contracts and thus any assent on their part may be invalid. He did not, however, discuss the topic in any detail.

===Code-Based===

Legal scholars have proposed several code-based solutions to the problem of online reputation. Jonathan Zittrain has discussed the possibility of expanding online rating systems, such as eBay's, beyond the simple buying and selling of goods to cover behavior more generally, potentially including broader ratings of people. The reputation system that Zittrain describes would allow users to declare reputation bankruptcy, akin to financial bankruptcy, in order "to de-emphasize if not entirely delete older information that has been generated about them by and through various systems." [28]
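The phrase "de-emphasize if not entirely delete" suggests a weighting rule: ratings from before a declared bankruptcy count for less, or not at all. The minimal Python sketch below is one hypothetical way to express that as a weighted average; Zittrain does not specify a formula, and the function name and `old_weight` parameter are illustrative assumptions.

```python
from datetime import date
from typing import List, Optional, Tuple

Rating = Tuple[date, float]  # (when the rating was given, score)


def reputation(ratings: List[Rating], bankruptcy: Optional[date] = None,
               old_weight: float = 0.0) -> Optional[float]:
    """Weighted mean score in which ratings before a declared bankruptcy get old_weight.

    old_weight = 0.0 deletes pre-bankruptcy ratings entirely;
    0 < old_weight < 1 merely de-emphasizes them.
    Returns None when no rating carries any weight.
    """
    total = weight_sum = 0.0
    for when, score in ratings:
        w = old_weight if bankruptcy is not None and when < bankruptcy else 1.0
        total += w * score
        weight_sum += w
    return total / weight_sum if weight_sum else None
```

With two ratings of 1.0 (2009) and 5.0 (2011), the plain average is 3.0; declaring bankruptcy in 2010 with the default weight yields 5.0, while `old_weight=0.5` yields roughly 3.67.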

Other code-based proposals would allow content stored online to be deleted via expiration dates, in an attempt to graft the natural process of human forgetting onto the Internet. A major proponent of this idea is Viktor Mayer-Schönberger, who wrote about such digital forgetting through expiration dates in his book “Delete: The Virtue of Forgetting in the Digital Age” [29]. Similarly, University of Washington researchers have developed a technology called "Vanish" that encrypts electronic messages so that they effectively self-destruct after a designated time period. [30]
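As a rough illustration of content that carries an expiration date, the Python sketch below stores each item with a lifetime and refuses to return it once that lifetime has passed. It assumes a single cooperative store (the `ExpiringStore` class and its methods are hypothetical) and does not reproduce Vanish's encryption-based design; the current time is passed in explicitly so the example is deterministic.

```python
from datetime import datetime, timedelta
from typing import Dict, Optional, Tuple


class ExpiringStore:
    """Hypothetical content store in which every item carries an expiration date."""

    def __init__(self) -> None:
        self._items: Dict[str, Tuple[str, datetime]] = {}

    def put(self, key: str, content: str, lifetime: timedelta, now: datetime) -> None:
        """Store content that should be 'forgotten' once `lifetime` has elapsed."""
        self._items[key] = (content, now + lifetime)

    def get(self, key: str, now: datetime) -> Optional[str]:
        """Return the content if it has not expired; otherwise delete it and return None."""
        entry = self._items.get(key)
        if entry is None:
            return None
        content, expires_at = entry
        if now >= expires_at:
            del self._items[key]  # expired: remove the item permanently
            return None
        return content


store = ExpiringStore()
posted = datetime(2011, 2, 1)
store.put("party-photo", "<photo data>", timedelta(days=30), posted)
print(store.get("party-photo", posted + timedelta(days=1)))   # <photo data>
print(store.get("party-photo", posted + timedelta(days=31)))  # None
```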

A less extreme code-based option is the "soft paternalistic" approach proposed by Alessandro Acquisti, in which individuals would receive a "privacy nudge" when sharing potentially sensitive information about themselves online. [31] This nudge would be built into social networking sites, for example, and could either give users helpful privacy information when they post such content or keep all such sensitive information private by default, so that users must manually switch on sharing. Such a "privacy nudge" has been analogized to Gmail's "Mail Goggles," an optional paternalistic feature designed to prevent drunken users from sending email messages that they might later regret. [32]
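The second form of nudge can be sketched as a check at posting time: if a post looks sensitive, the user sees a warning and the post stays private until the user explicitly opts to share it. In this Python sketch the keyword list `SENSITIVE_TERMS`, the `PostDecision` class, and the `prepare_post` function are our own hypothetical stand-ins; we do not claim they reflect how Acquisti or any site would detect sensitive content, and a real system would need a far better classifier than keyword matching.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical stand-in for a real sensitivity classifier.
SENSITIVE_TERMS = ("drunk", "party", "home address", "phone number", "diagnosis")


@dataclass
class PostDecision:
    visibility: str        # "public" or "private"
    nudge: Optional[str]   # warning shown to the user, if any


def prepare_post(text: str, user_opted_public: bool = False) -> PostDecision:
    """Apply a privacy nudge: sensitive posts default to private unless the user opts in."""
    lowered = text.lower()
    hits = [term for term in SENSITIVE_TERMS if term in lowered]
    if not hits:
        return PostDecision("public", None)
    nudge = ("This post may contain sensitive information (" + ", ".join(hits) +
             "). It will stay private unless you choose to share it.")
    return PostDecision("public" if user_opted_public else "private", nudge)


print(prepare_post("Great lunch today").visibility)      # public
print(prepare_post("So drunk at the party").visibility)  # private
```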

Hal Abelson recently provided a useful framework for thinking about technology that could support information accountability [33]. In particular, the capability of allowing users to "manipulate information via policy-aware interfaces that can enforce policies and/or signal non-compliant uses" is relevant, especially in the context of our proposal.

Ted Kang and Lalana Kagal, from MIT CSAIL, have proposed a "Respect My Privacy (RMP) framework." [34] Their framework generates privacy and usage control policies for social networks, such as Facebook, and visualizes these policies for users. Semantic Web technologies [35] enable extensive functionality, such as the "dynamic definition, extension, and re-use of meta-data describing privacy policy, intended purpose or use of data." RMP currently offers five restrictions: no-commercial (similar to its Creative Commons [36] counterpart), no-depiction, no-employment, no-financial, and no-medical.
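RMP expresses its restrictions in Semantic Web vocabularies. As a simplified stand-in, the Python sketch below attaches the five restrictions to a piece of content as plain enum values and reports which of them a proposed use would violate, in the spirit of Abelson's "signal non-compliant uses." The `Restriction` enum, its string values, and the `violations` function are hypothetical and do not reflect RMP's actual ontology or API.

```python
from enum import Enum
from typing import List, Set


class Restriction(Enum):
    """The five RMP restrictions named above; the string values are our own labels."""
    NO_COMMERCIAL = "commercial"
    NO_DEPICTION = "depiction"
    NO_EMPLOYMENT = "employment"
    NO_FINANCIAL = "financial"
    NO_MEDICAL = "medical"


def violations(restrictions: Set[Restriction], intended_use: str) -> List[Restriction]:
    """Return the attached restrictions that forbid the intended use (empty list = compliant)."""
    return [r for r in restrictions if r.value == intended_use]


photo_restrictions = {Restriction.NO_COMMERCIAL, Restriction.NO_EMPLOYMENT}
print(violations(photo_restrictions, "employment"))  # [<Restriction.NO_EMPLOYMENT: 'employment'>]
print(violations(photo_restrictions, "personal"))    # []
```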

Market-Based

Some believe that reputation problems on the Internet are best solved by letting market forces determine the outcome, uninhibited by other regulation. [37] In the wake of concern over online reputation, a number of private companies have emerged to defend it. One such company is Reputation Defender, created in 2006 to help "businesses and consumers control their online lives." [38] For a monthly or yearly fee, Reputation Defender claims it will protect a user's privacy, promote the user online, or suppress negative search results about the user. [39]

Companies like Reputation Defender do offer the average individual some measure of protection. However, such market solutions have several problems. The first is cost. Some Reputation Defender features carry price tags as high as $10,000, far beyond what an ordinary individual can afford to spend sanitizing his or her search results. Even the less expensive services appear to cost $5-10 per month, which may be more than many individuals can afford, especially for long-term protection. The second is effectiveness. It is unclear exactly which tactics such companies use, but for the most part they can offer only short-term solutions, and these will face increasing challenges as advances such as facial-recognition technology make it easier to find people online and harder to protect their identities. [40]

Norm-Based

It is also possible that reputational issues could be addressed through the development of norms. David Ardia, for example, has argued for a multi-faceted approach that focuses on ensuring the reliability of reputational information rather than on imposing liability. He also advocates enlisting the community, including the online community, to resolve reputation disputes by enforcing societal norms. [41]

Norms could also be as simple as asking others "Please don't tweet this" when discussing sensitive or private topics, and expecting that norms will compel others to respect such requests. [42] As the public grows to better understand and become comfortable with technological advances, such norms could help to curb the problem of personal information being leaked online.

Another norm-based solution would be to simply educate the public about reputational and privacy concerns in the context of the Internet. This has the potential to be especially effective with children and youth, who will grow up with advances in technology but may not understand the full repercussions of their actions. One example is the European Union's youth-focused education campaign "Think before you post!", designed to "empower[] young people to manage their online identity in a responsible way." [43] After years of pressure, a number of content intermediaries voluntarily adopted self-regulatory initiatives aimed at improving minors' safety on social networking sites in Europe. [44] Recent follow-up reports, however, have shown that the program's success has been less than resounding. Specifically, despite their promises, a majority of the participating companies failed to implement some important changes, such as "to ensure the default setting for online profiles and contact lists is 'private' for users under 18." [45] Moreover, although many companies added an avenue for youth to report harassment, few of them appear ever to respond to such complaints. [46] Thus, while educational and norm-based proposals are a step in the right direction, they may lack sufficient force to bring about the desired changes.

The "Respect My Privacy (RMP)" proposal, mentioned above in the discussion of code-based solutions, also has norm-based features. The authors note that "an accountable system cannot be adequately implemented on social networks without assistance from the social network itself" ([47] in [48]). In other words, a successful, comprehensive solution requires norms and best practices in addition to code.

Proposed Solution

Overview

We are proposing the creation of a personal legal right to control content depicting or identifying oneself as a minor, limited by an objective standard and supported by best-practice code and norms under which Content Intermediaries annotate content depicting minors. The specific proposal and its scope are outlined below.

Minor's Online Identity Protection Act (MOIPA):

MOIPA will take the form of a notice-and-takedown system. The requester will have to demonstrate that his or her request falls within the scope of MOIPA. We envisage wide adoption, by the leading Content Intermediaries, of digital watermarks on content depicting minors, as described further below. Such a watermark would serve as prima facie evidence that the content depicts or contains identifying information about a minor. Absent a watermark or other metadata (or if a watermark is challenged), the requester will have to provide other evidence of the age and identity of the minor depicted or identified in the content in question (e.g., a government-issued ID or a notarized statement). Simple standardized notice forms would be available to fill out and send online.
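The evidentiary steps of a MOIPA notice can be summarized as a small decision procedure. The Python sketch below is illustrative only: the `Content` and `Notice` classes, the outcome labels, and the set of accepted evidence types are hypothetical names for the elements described in the text, and a request that passes this check would still face the objective limitation discussed below.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical labels for the forms of evidence mentioned in the text.
ACCEPTED_EVIDENCE = {"government_id", "notarized_statement"}


@dataclass
class Content:
    content_id: str
    minor_watermark: bool               # watermark/metadata says the content depicts a minor
    watermark_challenged: bool = False


@dataclass
class Notice:
    content_id: str
    requester_is_subject: bool          # MOIPA is a personal right of the individual depicted
    evidence: Optional[str] = None      # proof of age and identity, if supplied


def evaluate_notice(notice: Notice, content: Content) -> str:
    """Decide whether a takedown notice may proceed to the objective-limitation review."""
    if notice.content_id != content.content_id:
        return "WRONG_CONTENT"
    if not notice.requester_is_subject:
        return "OUT_OF_SCOPE"
    if content.minor_watermark and not content.watermark_challenged:
        return "PRIMA_FACIE"            # watermark establishes that the content depicts a minor
    if notice.evidence in ACCEPTED_EVIDENCE:
        return "EVIDENCE_ACCEPTED"
    return "NEEDS_EVIDENCE"             # requester must supply proof of age and identity
```

Returning a simple label mirrors the goal of standardized online forms: most notices could be triaged without legal argument.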

It is important to emphasize that MOIPA requires affirmative action on the part of the individual. It would be used only in very limited instances where an individual feels very strongly about the content and all the required criteria are met. Nothing would be removed or deleted automatically (cf. juvenile record sealing and expungement).

Supporting Code and Norms:

MOIPA goes hand in hand with a set of best-practice code and norms that will help simplify the notice-and-takedown process. Recent advances in technology, particularly semantic web technologies, make it possible to digitally tag and augment all forms of content, even text, with additional information, such as the identities of the Individual Depicted, the Content Creator, and the Content Sharer, along with any relevant and available date/time information. One of the solutions presented in the section above, RMP by Ted Kang and Lalana Kagal [49], was based on semantic technologies, in particular a custom-made ontology, to annotate content. As in their proposal, we believe a mix of code and norms is necessary to complement MOIPA and make it successful.

Content could either be tagged automatically when it is linked to accounts identified as belonging to minors or require the poster to tick an affirmative check box (or both) if the content depicts or identifies minors (similar to “I have accepted the terms of service” or “I have the right to post this content” boxes).

Ideally, every piece of relevant content submitted to a Content Intermediary would have such annotations; this is particularly important in the context of the role of Search Engines, as explained below.
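The annotation and tagging described above might look like the following Python sketch. The `Annotation` fields mirror the parties named in the text, but the field names, the `annotate` function, and our reading of "tagged to accounts" as the accounts of the people depicted are assumptions; in practice such metadata would be expressed in a semantic web format such as RDFa rather than as a Python object.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Iterable, List, Optional, Set


@dataclass
class Annotation:
    """Hypothetical metadata attached to an uploaded item."""
    content_creator: str
    content_sharer: str
    individuals_depicted: List[str] = field(default_factory=list)
    created_at: Optional[datetime] = None
    depicts_minor: bool = False


def annotate(creator: str, sharer: str, depicted: Iterable[str],
             created_at: Optional[datetime], minor_accounts: Set[str],
             checkbox_minor: bool) -> Annotation:
    """Tag content as depicting a minor automatically, via the uploader's check box, or both."""
    people = list(depicted)
    auto_tagged = any(person in minor_accounts for person in people)
    return Annotation(creator, sharer, people, created_at,
                      depicts_minor=auto_tagged or checkbox_minor)


minors = {"carol"}
a = annotate("alice", "bob", ["carol"], datetime(2011, 2, 1), minors, checkbox_minor=False)
print(a.depicts_minor)  # True: carol's account belongs to a minor
```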

Objective Limitation:

MOIPA would also contain an objective limitation to make sure that only reasonable and legitimate requests are honored as well as to limit any potential abuses of the law. The limitation would also help ensure that MOIPA would pass First Amendment scrutiny if challenged. The limitation would be similar to that seen in many torts, including the public disclosure of private fact tort, which provides a cause of action where (among other elements) the disclosure would be “offensive to a reasonable person." [50]

It is important, however, that the law remain relatively simple to implement and not produce prolonged legal battles that waste time and resources. Simple, streamlined ways of enforcing the objective limitation would therefore be preferable. One option that would also co-opt technology would be to crowd-source the determination to a number of others (possibly a "jury" of 12), asking whether the content is objectively embarrassing, unfavorable, or offensive. More broadly, these outsiders could simply determine whether it is objectively "reasonable" for the individual to request that the identifying or depicting content be taken down. A simple majority finding that the content is sufficiently embarrassing or offensive could suffice. Participants would not be able to download the content and would be bound by an agreement not to share or disclose it themselves.
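The crowd-sourced jury can be sketched in a few lines of Python: draw a random panel from a pool of reviewers and check whether a simple majority considers the takedown request reasonable. The reviewer pool, the `vote` callback, and the default jury size of 12 are assumptions for illustration; the sketch does not model the non-download and non-disclosure safeguards, which would have to be enforced elsewhere.

```python
import random
from typing import Callable, Optional, Sequence


def crowd_review(reviewers: Sequence[str],
                 vote: Callable[[str], bool],
                 jury_size: int = 12,
                 seed: Optional[int] = None) -> bool:
    """Return True if a simple majority of a randomly drawn jury finds the request reasonable.

    `vote(juror)` returns True when that juror finds the content objectively
    embarrassing or offensive enough to justify removal.
    """
    if len(reviewers) < jury_size:
        raise ValueError("not enough reviewers to seat a jury")
    rng = random.Random(seed)                      # seeded for reproducible examples
    jury = rng.sample(list(reviewers), jury_size)  # draw jurors without replacement
    yes_votes = sum(1 for juror in jury if vote(juror))
    return yes_votes > jury_size // 2              # strictly more than half
```

With 12 jurors, at least 7 must agree; under this sketch a 6-6 tie leaves the content in place.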

Involved Parties

We have identified the following parties to be of importance when discussing our proposed solution.

- Individual Identified or Depicted

The Individual Identified or Depicted is at the center of our proposal and our attention. MOIPA is a personal right designed to be available to an individual with respect to conflicting content about him or her as a minor.

- Content Creator

The Content Creator is the person who creates content (e.g., takes a picture) and typically holds the copyright in the content.

- Content Sharer

The Content Sharer is a person who uploads content to Content Intermediaries. In most cases, Content Sharer and Content Creator will be the same person, but not always.

- Content Intermediaries

Content Intermediaries span personal blogging platforms such as WordPress and Blogger as well as social networking sites such as Facebook and MySpace. Facebook already uses semantic technologies (in particular RDFa, a semantic web mark-up language, to provide additional meta-data about its content [51]) and could easily expand that meta-data to cover the privacy and minor information suggested above. The same is true for popular blogging services such as WordPress or Blogger.

- Search Engines

Search Engines, such as Google or Microsoft Bing, could play an important part in implementing our proposal successfully. Google already uses RDFa to augment search results (see, for example, [52]). Similarly, Google could implement a MOIPA best-practice directive that would, for example, exclude certain content related to minors that is annotated in a particular way. Because of their vast power over what content people actually find and see on the Internet, search engines are an ideal target for a law like MOIPA. A focus solely on search engines, although not our current proposal, is a possible alternative to consider in the future, especially since it might be easier to implement than the suggested notice-and-takedown system.
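A search engine applying such a directive might simply drop annotated results before displaying them. In this Python sketch the annotation value `moipa:exclude` is hypothetical (no such vocabulary exists today), and we assume the search engine has already extracted each page's annotations, for example from its RDFa markup.

```python
from dataclasses import dataclass
from typing import FrozenSet, List

# Hypothetical annotation marking content that MOIPA best practice says to exclude.
MOIPA_EXCLUDE = "moipa:exclude"


@dataclass(frozen=True)
class SearchResult:
    url: str
    annotations: FrozenSet[str] = frozenset()


def apply_moipa_directive(results: List[SearchResult]) -> List[SearchResult]:
    """Remove results carrying the exclusion annotation while preserving ranking order."""
    return [r for r in results if MOIPA_EXCLUDE not in r.annotations]


ranked = [
    SearchResult("https://example.org/news"),
    SearchResult("https://example.org/video", frozenset({MOIPA_EXCLUDE})),
    SearchResult("https://example.org/blog"),
]
print([r.url for r in apply_moipa_directive(ranked)])
# ['https://example.org/news', 'https://example.org/blog']
```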

Note: some of these parties could be the same person in a given scenario.

Scope

In our opinion, the scope of MOIPA has to cover all pieces of content that depict or identify minors. In order to satisfy First Amendment concerns, minors who are of "legitimate public interest" (e.g., celebrities, performers/actors, and possibly children of famous public figures) will be excluded from the scope of our proposal.

Identity-related content:

Within the scope of our proposal, identity-related content can take various formats, ranging from pictures, to videos, to text.

While a general discussion of Star Wars Kid [53] is not necessarily harmful to the boy, content that links his real name to the footage is potentially harmful to him and adds very little public value. Such content should not be removed or deleted automatically, but he should have the right to have the identifying information removed.

Minors:

In our opinion, minors present a particularly compelling case for MOIPA. The age of 18 is a cutoff already recognized by the government and drawn in numerous contexts. As a reference point, one could look to the various provisions for wiping juvenile criminal records (e.g., expungement) [54].

With respect to the age barrier, four scenarios are possible, as illustrated in the chart below.

(1) Content about oneself and at the time of posting still a minor (younger than 18)

(2) Content about another minor and at the time of posting the poster is a minor (younger than 18)

(3) Content about a minor and poster is an adult at time of posting

(4) Content about oneself and at the time of posting the poster is an adult

Our proposal clearly covers scenarios (1), (2), and (3), but we believe scenario (4) deserves further discussion. Although covering (4) might be less compelling in theory, in our opinion it would be easier and more efficient to implement in practice.
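The four scenarios turn on two questions (whether the content is about the poster, and whether the poster was a minor when posting), plus the threshold question of whether the content depicts or identifies a minor at all. The Python sketch below encodes that classification; the function names are ours, and `COVERED` reflects the position that scenario (4) remains open.

```python
from datetime import date
from typing import Optional

AGE_OF_MAJORITY = 18
COVERED = {1, 2, 3}  # scenario 4 is still under discussion


def age_on(birth: date, day: date) -> int:
    """Age in whole years on a given day."""
    years = day.year - birth.year
    if (day.month, day.day) < (birth.month, birth.day):
        years -= 1  # birthday not yet reached that year
    return years


def is_minor(birth: date, day: date) -> bool:
    return age_on(birth, day) < AGE_OF_MAJORITY


def scenario(about_oneself: bool, poster_minor_when_posting: bool,
             depicts_minor: bool) -> Optional[int]:
    """Map content to scenarios (1)-(4); None means it is outside MOIPA's scope."""
    if not depicts_minor:
        return None
    if about_oneself:
        return 1 if poster_minor_when_posting else 4
    return 2 if poster_minor_when_posting else 3


# A poster born 15 June 1990 uploads a childhood photo of herself on 1 March 2011.
poster_minor = is_minor(date(1990, 6, 15), date(2011, 3, 1))
s = scenario(about_oneself=True, poster_minor_when_posting=poster_minor, depicts_minor=True)
print(s, s in COVERED)  # 4 False
```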