GROUP ONE: Difference between revisions


The prominence of user generated content and the permanence of online memory have created a difficult problem that could threaten the futures of many people today.<ref> We would like to thank Viktor Mayer-Schönberger for all of his assistance with this project</ref> Social networks, blogs, and other websites have become incredibly popular locations to put pictures, videos, and text about an individual, his opinions, and his actions. Many of these items may not be considered objectionable at first, but they could become sources of embarrassment in the future.<ref>Alan Finder, ''For Some, Online Persona Undermines a Résumé'', The New York Times (June 11, 2006), http://www.nytimes.com/2006/06/11/us/11recruit.html?pagewanted=1&_r=1&sq=For%20Some,%20Online%20Persona%20Undermines%20a%20R%C3%A9sum%C3%A9&st=cse&scp=1.</ref> As of now, information on the Internet is long-lasting, if not permanent, and many individuals do not have the ability to remove media and information that depicts or references them. This permanence could lead to negative ramifications in a short period of time when an individual seeks a new job or relationship. Our project seeks to remedy this medium-term harm by establishing a process by which users can have information about themselves removed from "walled gardens" like social networks and blogs. We hope this will allow people to recover from mistakes, not be doomed by them.

=Introduction=

Perhaps the seminal discussion on privacy is Samuel Warren and Louis Brandeis’ Harvard Law Review article [http://groups.csail.mit.edu/mac/classes/6.805/articles/privacy/Privacy_brand_warr2.html ''The Right to Privacy''] from 1890. The authors wrote this article in response to the invention of new camera technology that allowed for instantaneous photographs. Warren and Brandeis feared that such technology would expose the private realm to the public, creating a situation in which “what is whispered in the closet shall be proclaimed from the house-tops.” Their article argued that a tort remedy should be available for those whose privacy had been violated in such a way. Warren and Brandeis's thesis exemplified a trend in legal thought that would achieve more prominence in the Internet Age: the need for normative, legal and governmental structures to protect private and undesirable information about people from reaching the public.

The Internet has created a world of permanence, in which whispers in the closets are not only “proclaimed from the house-tops” but also never disappear. In ''Delete: The Virtue of Forgetting in the Digital Age'', Viktor Mayer-Schönberger describes how the digital age changed the status quo of society, which had been “we remembered what we somehow perceived as important enough to expend that extra bit of effort on, and forgot most of the rest.”<ref>Viktor Mayer-Schönberger, ''Delete: The Virtue of Forgetting in the Digital Age'' 49 (2009)</ref> Four technological advances (digitization of information, cheap storage, easy retrieval, and the global reach of the Internet) have made all memories permanent.<ref>''Id''. at 52.</ref> This change has forced humans to constantly deal with their past, preventing them from growing and moving on.

The permanent past created by the Internet has increased the availability of information that can be used to judge people. Lior Jacob Strahilevitz discusses the effect of this flood of information on reputation and exclusion in his article [http://papers.ssrn.com/sol3/papers.cfm?abstract_id=1028875 ''Reputation Nation: Law in an Era of Ubiquitous Personal Information'']. The ability of a Google search or a social networking site to deliver personal information allows people to utilize online and real world reputation when making decisions in a way that was not feasible years ago. Strahilevitz discusses both the positive and negative ramifications of this information surplus, but other scholars have focused on its negative effect on offline lives. Daniel Solove in [http://docs.law.gwu.edu/facweb/dsolove/Future-of-Reputation/text.htm ''The Future of Reputation: Gossip, Rumor, and Privacy on the Internet''] describes many of these concerns. Solove relates many stories demonstrating the negative ramifications of online information, such as a girl whose refusal to pick up her dog’s waste led to her picture becoming famous online. A commenter on a blog which discussed this picture stated “[r]ight or wrong, the [I]nternet is a cruel historian.” Solove states that the ability of information, especially that which is considered scandalous or interesting, to spread quickly and become permanent has distorted how we judge people. Many different types of people (family, friends, employers, etc.) can evaluate each other using personal information and gossip that many would consider to be unfair factors in such an evaluation. [http://www.jstor.org/stable/40041279 Solove] in his article ''A Taxonomy of Privacy'' notes that “disclosure can also be harmful because it makes a person a "prisoner of [her] recorded past."
People grow and change, and disclosures of information from their past can inhibit their ability to reform their behavior, to have a second chance, or to alter their life's direction. Moreover, when information is released publicly, it can be used in a host of unforeseeable ways, creating problems related to those caused by secondary use.” Solove refers to Lior Strahilevitz's observation that "disclosure involves spreading information beyond existing networks of information flow." According to Solove, the harm of disclosure is not so much the elimination of secrecy as it is the spreading of information beyond what the user wished. One inappropriate Facebook picture or malicious blog post can haunt someone for the rest of his life.

Today's young adults and teenagers are especially aware that they are growing up in a world where camera phones and constant access to the Internet create the potential for their lives to be displayed publicly. A May 2010 [http://www.pewinternet.org/Reports/2010/Reputation-Management.aspx Pew Internet and American Life] study suggests that privacy is highly valued by the next generation of social networking users. The report found that “Young adults, far from being indifferent about their digital footprints, are the most active online reputation managers in several dimensions. For example, more than two-thirds (71%) of social networking users ages 18-29 have changed the privacy settings on their profile to limit what they share with others online.” This generation is concerned about the effects of youthful indiscretion and does not want one mistake to limit its future prospects.

A [http://ssrn.com/abstract=1589864 study] by scholars at the University of California, Berkeley's Center for Law & Technology and Center for the Study of Law and Society, and at the University of Pennsylvania's Annenberg School for Communication, found privacy norms that are consistent with and provide some support for the privacy theory underlying our model. For instance:

:Large proportions of all age groups have refused to provide information to a business for privacy reasons. They agree or agree strongly with the norm that a person should get permission before posting a photo of someone who is clearly recognizable to the internet, even if that photo was taken in public. They agree that there should be a law that gives people the right to know “everything that a website knows about them.”<ref> Chris Hoofnagle et al., ''How Different are Young Adults from Older Adults When it Comes to Information Privacy Attitudes and Policies?'' 11 (April 14, 2010). Available at SSRN: http://ssrn.com/abstract=1589864. </ref>

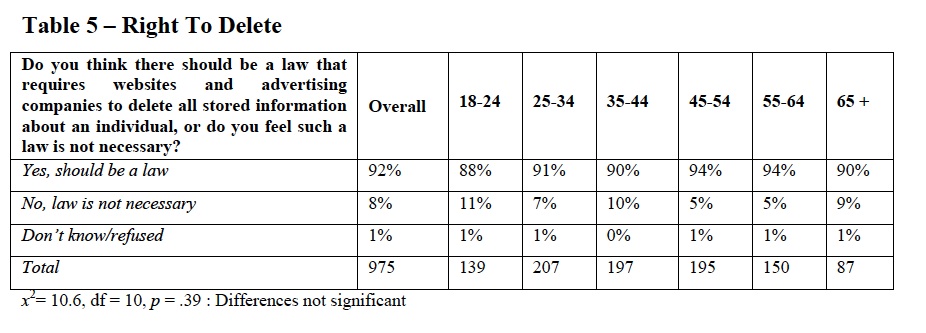

And a large majority of members of each age group agree that there should be a law that requires websites and advertising companies to delete “all stored information” about an individual.<ref>''Id.''</ref> The following is a chart from the study demonstrating the popularity of a deletion requirement:

[[Image: Right to Delete.jpg]]<ref>''Id.''</ref>

=Proposed Mid-term Content Management System=

==Our Approach==

[http://cyber.law.harvard.edu/difficultproblems/GROUP_ONE#Literature_Review Many proposals regarding online reputation and information] share several characteristics: significant government intervention and a focus on dramatic short-term reputation harm. Regarding government intervention, many scholars devote a portion of their articles and books to discussing various legal problems with their proposals, namely First Amendment challenges. Eugene Volokh in his article [http://www.law.ucla.edu/volokh/privacy.htm#SpeechOnMattersOfPrivateConcern ''Freedom of Speech and Information Privacy: The Troubling Implications of a Right to Stop People From Speaking About You''] discusses how broad information privacy regulations would not be allowable under the First Amendment and how allowing such regulations could create negative legal and social ramifications. Many proposals that involve the government restricting speech would likely face significant First Amendment roadblocks.

Often proposals focus on the highly objectionable material that would warrant liability or a complete erasing of one’s online reputation. Yet these approaches do not address the embarrassing yet legal and mundane content, such as a drunk picture or an inappropriate Facebook group on which an individual once posted. Such activities likely do not rise to the level warranting litigation or complete erasure of one's online identity, but they could negatively affect one’s professional and personal relationships. They are the equivalent of putting one's foot in one's mouth.

Our project departs from these proposals both in its focus and implementation. We have divided online reputation into three different types: short-term, medium-term, and long-term. Short-term harms are exemplified by those involved with the [http://jezebel.com/5652114/college-girls-power-point-fuck-list-goes-viral-gallery Duke sex powerpoint scandal], in which an incendiary piece of information creates immediate and dramatic ramifications for one’s reputation. Long-term reputational content is something that has been built over many years, such as one’s [http://pages.ebay.com/services/forum/feedback.html eBay rating]. Medium-term reputational content is not necessarily immediately recognized as harmful, but its ramifications may come up when establishing a relationship or looking for a job. For example, a freshman in college may have a picture of himself drunk on Facebook. Such a picture may not be an issue now but could become one when he looks for a job in several years. Our proposal will address these medium-term issues.

Our proposal relies on a combination of market- and norms-based approaches. Jonathan Zittrain creates a classification system for such approaches to online issues in his article [http://law.fordham.edu/assets/LawReview/Zittrain_Vol_78_May.pdf ''The Fourth Quadrant'']. This system uses two measures: how “generative” the approach is and how “singular” it is. An undertaking that is created and operated by the entire community (not just by a smaller entity separated from the community) and is the only one of its kind utilized in the online realms falls into what Zittrain terms the “fourth quadrant.” Our system will be created and implemented by the social network and blog communities, both their operators and users. The government will not implement or enforce anything. Since our system is optional for websites, it initially will be one of several potential approaches dealing with the medium-term harms to reputation. However, our hope is that its advantages and benefits (including its ability to attract users through promises of increased privacy) will allow it to become a popular system and, therefore, eventually become part of the fourth quadrant.

We believe that a market- and norms-based approach avoids the First Amendment issues, is more responsive to the needs of users and internet service providers, and is more likely to be implemented as a bottom-up approach that can be tailored to meet the specific preferences of different demographics interfacing with different forms of online expression.

==Assumptions==

As discussed above, there are three distinct phases of reputation: short-term, mid-term and long-term. Our model is targeted solely at mid-term reputational issues. Short- and long-term issues will require other mechanisms and should be considered independently.

When composing our model, we had four core assumptions:

*1) All user-generated content should expire and cease to be publicly available after a set length of time, unless it is actively renewed. This theory follows from Viktor Mayer-Schönberger’s work. <ref> ''See'' ''Delete: The Virtue of Forgetting in the Digital Age''</ref>

*2) The path of least resistance should result in the socially optimal outcome. This means that whenever a user follows default settings or acts in a lazy or apathetic fashion, the end result should be desirable. The model should make doing the “right” thing as easy as possible.

*3) Direct, personal communications between real people lead to more cooperative outcomes. A user can empathize and be more receptive to an individualized appeal from another person than he would be to an automated message. Building these sorts of communications into the system fosters a social norm of cooperation and understanding.

*4) The system should be content neutral so as to increase efficiency and avoid arbitrary implementation. If the system discriminated in how it treated content based on what type of content it was, then the process would get bogged down in the minutiae of moralistic review.

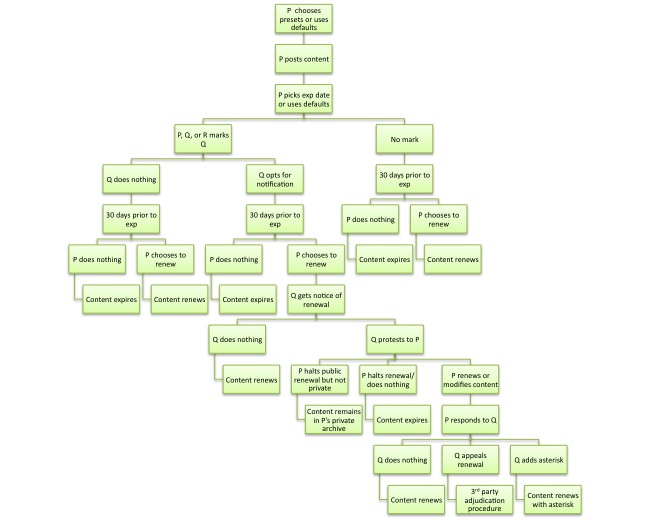

Our model will present a process by which content expires or renews, depending on how implicated parties intervene or abdicate. In the model, P is a user who initially posts content, Q is a user who is represented in the content, and R is a third-party bystander.

The system would be applied by social media websites that choose to apply it. There is no feasible method of mandating its use, nor is there a reason to. These websites will want to implement this system or one similar to it of their own accord. Here’s why:

*1) The system will make a website more desirable to users who value their privacy and reputations.

*2) The system creates a stickiness that will keep users coming back to the social media website that uses it.

*3) The system does not substantially impede a website's ability to profit from data mining, thus allowing it to promise greater privacy protections to its users without hurting the company’s source of revenue.

Finally, it is important to note that the system is not designed to be 100% effective at addressing all potentially problematic content. The goal is solely to create a process that is easy to implement, easy to understand, and will achieve the socially optimal result in the vast majority of cases.

==Model==

[[Image:New Model.jpg]]

*1) A user, P, is presented with preferences when he first uses the system. He can either use the presets chosen by the website or adjust the preferences to his taste. These preferences would determine his default actions for expirations, renewals, notifications, protests, etc.

*2) P posts content on the website.

*3) P chooses an expiration date for his content or uses his default expiration settings.

*4) The content might contain a representation of Q. Q can then be “marked” on the content by P, Q or R. To be “marked” means that Q is alerted that he is implicated in this content and is given the option to be notified when the content comes up for renewal.

*5) If no one is marked on the content, then P will, in accordance with his preferences, receive a notification 30 days prior to the content’s expiration date. P can then choose to renew the content or do nothing. If P does nothing, the content expires.

*6) If Q is marked but never opts in for notification, then the content is treated as if no one is marked.

*7) If Q is marked and opts in for notification, and if P chooses to renew the content, then Q will receive a notification that P chose to renew the content.

*8) Q can ignore this notification and the content will renew as per P’s decision.

*9) Alternatively, Q can “protest” P’s decision to renew the content. This means that Q would send a message to P saying that he doesn’t want the content renewed and explaining why. This protest would contain standard options that Q could select for his reasoning, but Q would also be required to compose a personalized message to P.

*10) P, upon receiving Q’s protest, can choose to move forward with the renewal (with or without modifying the content), halt the public renewal, or do nothing.

*11) If P does nothing, the content will expire, as per Q’s wishes.

*12) If P halts the public renewal, the content will still be kept in P’s private archive but will not be viewable to any other users.

*13) If P chooses to move forward with the renewal (with or without modifying the content), P must then send a response to Q explaining why P chose to renew the content over Q’s protest. This would also be a personalized message.

*14) Upon receiving P’s response, Q might be satisfied with P’s reasons or modifications. If Q does nothing, the content will renew.

*15) If Q is partially dissatisfied with P’s response, Q could choose to add an “asterisk” to the content. This would be a discrete message that viewers of the content would see, consisting of Q’s protest to P. The content would renew with the asterisk.

*16) If Q is substantially dissatisfied with P’s response, Q could appeal the renewal to an adjudication process. This process would determine whether or not the content renews.
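The renewal branch of the model (steps 5 through 16) can be read as a small decision procedure. The following Python sketch is only an illustration of that reading, not part of the proposal: the names <code>resolve_renewal</code>, <code>PReply</code>, <code>QFinal</code> and <code>Outcome</code> are hypothetical, and the sketch assumes that each party's choice is already known when the function is called, whereas a real website would collect those choices over time through notifications.

```python
from enum import Enum


class PReply(Enum):
    """P's possible reactions to a protest from Q (step 10)."""
    NOTHING = "nothing"
    HALT_PUBLIC = "halt_public"
    RENEW = "renew"


class QFinal(Enum):
    """Q's possible reactions to P's response (steps 14-16)."""
    ACCEPT = "accept"
    ASTERISK = "asterisk"
    APPEAL = "appeal"


class Outcome(Enum):
    EXPIRED = "expired"
    RENEWED = "renewed"
    RENEWED_WITH_ASTERISK = "renewed_with_asterisk"
    PRIVATE_ARCHIVE = "private_archive"
    ADJUDICATION = "adjudication"


def resolve_renewal(
    p_renews: bool,
    q_opted_in: bool = False,
    q_protests: bool = False,
    p_reply: PReply = PReply.NOTHING,
    q_final: QFinal = QFinal.ACCEPT,
) -> Outcome:
    """Return the fate of one piece of content at its expiration date."""
    # Step 5: if P does nothing, the content expires. Q cannot prevent this.
    if not p_renews:
        return Outcome.EXPIRED
    # Step 6: a marked Q who never opted in is treated as unmarked.
    # Step 8: a notified Q who ignores the notice lets the renewal stand.
    if not q_opted_in or not q_protests:
        return Outcome.RENEWED
    # Step 11: P ignores the protest, so the content expires.
    if p_reply is PReply.NOTHING:
        return Outcome.EXPIRED
    # Step 12: P keeps the content, but only in a private archive.
    if p_reply is PReply.HALT_PUBLIC:
        return Outcome.PRIVATE_ARCHIVE
    # Step 13: P renews over the protest and must send Q a response.
    if q_final is QFinal.ASTERISK:
        return Outcome.RENEWED_WITH_ASTERISK  # step 15
    if q_final is QFinal.APPEAL:
        return Outcome.ADJUDICATION  # step 16
    return Outcome.RENEWED  # step 14
```

Note that every default argument corresponds to a party doing nothing, so the sketch reflects the second core assumption: when P takes no action, the content expires.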

==Explanation of the Model==

'''Users will set preferences or use default preferences for expiration and notification to streamline decisions.''' These will help triage content to prevent users from being deluged with notifications.

*For example, presets could allow users to auto-ignore, auto-opt in to notify, or auto-protest based on the identity of the other party. Certain groups might get trusted automatically, while others would be automatically suspect.

**E.g. If your mother marks you in a photo, you know that most of the time you probably don’t need to worry about its content.

*However, because the purpose of the system is to expire valueless content, users would not be allowed to set their preferences to auto-renew their content.

'''When P chooses an expiration date, P can opt to receive or not receive notice prior to expiration.''' Presumably, most content, being transitory, will be allowed to expire without notice (e.g. status posts, comments, tweets, etc.). Thus, only truly substantive content would ever require subsequent action by P or Q.

'''Only an actual person depicted by the content would have “standing” to be marked.'''

*This rule’s efficient application will compensate for its under-inclusivity. Trying to get 100% inclusivity for all affected users or content would make the perfect the enemy of the good.

*The rule will also help prevent large groups from ganging up to harass one person.

'''Captchas would be used to ensure that only real people will be able to mark content. <ref>Interview with Viktor Mayer-Schönberger (Nov. 17, 2010).</ref> '''

'''Q should not have the ability to prevent expiration.''' The content belongs to P; if he wants to expire it, that’s his right.

'''R should not have the ability to prevent either expiration or renewal.''' Being neither the owner of the content (P) nor someone implicated in the content (Q), a third party should have no rights to dictate what happens or doesn’t happen with the content.

'''Users could maintain a private archive of their content that will not expire, subject to their preferences.''' Many users use online social media websites as multimedia diaries of their lives. Users should have the option of permanently retaining all of their own content for private viewing. But they should not be able to keep such information publicly viewable without going through the system.

*Our chief concern is controlling mid-term reputational effects. Thus, if something is private, it cannot substantively affect someone else’s reputation and does not need to be constrained.

'''No one will be able to search for asterisks. <ref>''Id.''</ref> ''' Asterisks serve to explain content that Q finds objectionable. By their very nature, asterisks will be associated with controversial content. If a user could search for an asterisk, then these asterisks would serve as homing beacons for the very content that Q wants to disappear in the first place.

'''The adjudication process might be styled after either the Uniform Domain-Name Dispute-Resolution Policy (“UDRP”) or eBay’s adjudication process. <ref>''Id.''</ref> ''' It is likely that this process would be contracted out to established third parties. Contracting this service out to the market will allow different methods to develop competitively, fostering innovation and efficiency.

*It should be noted that the adjudication process is the only part of the model that will take a substantive look at the nature of the content.

==Concerns and Responses to the Model==

'''1. How do we prevent a deluge of expiration notifications and other notifications from the process?'''

'''Concern''': The model is likely to create a considerable amount of email notification both for P and for Q. Online social media users are generating content every day, so the flow will be constant. This flood of emails could discourage some walled gardens from implementing the model if they fear their users will abandon their network because their mailboxes are constantly flooded. Are users going to take concerns seriously when they receive so many of them over short periods of time?

'''Response''': As in every architectural design (both physical and technological), there are tradeoffs. The benefit of the model is that it facilitates interactions and keeps users constantly apprised about content they generate and content generated about them. The con is reflected in the benefit: they are constantly being kept informed, which means they are constantly receiving notifications. Aside from the benefit of being aware, there are some tools users could employ to avoid mailbox flooding.

Users could use their default settings to have content scheduled to expire automatically, without notification. They could do this for all their content and select it as a default or they could manage pictures, status updates, posts, etc. individually. The more tailored their control over the system, the more easily users could manage their data and notifications. Content generators seeking to avoid notification from those marked to their content could also benefit from the use of default settings. Perhaps they could set their default settings such that if someone would like the content taken down, the system would automatically defer to that user. The walled garden settings could also permit individuals to differentiate by user or group. For example, a default setting could look something like: I do not want to be notified if Family and Good Friends object to content being renewed and I will defer to their requests, but I would like to be notified if Pete Campbell or someone from the High School group objects.
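The default setting described above could be represented as a small rule set. The sketch below is a hypothetical illustration in Python: the class <code>ProtestPreferences</code>, its field names, and the return values <code>"defer"</code> and <code>"notify"</code> are all assumptions made for this example. It also assumes two tie-breaking rules that the proposal does not specify: an explicit request to be notified takes precedence over group-based deference, and an objector who matches no rule triggers a notification.

```python
from dataclasses import dataclass, field


@dataclass
class ProtestPreferences:
    """P's defaults for handling objections to a renewal (hypothetical)."""
    defer_groups: set[str] = field(default_factory=set)
    notify_groups: set[str] = field(default_factory=set)
    notify_users: set[str] = field(default_factory=set)

    def handle_protest(self, user: str, groups: set[str]) -> str:
        """Return "notify" to alert P, or "defer" to silently honor the protest."""
        # Assumption: explicit notify rules win over deference rules.
        if user in self.notify_users or groups & self.notify_groups:
            return "notify"
        # Defer without notification to trusted groups.
        if groups & self.defer_groups:
            return "defer"
        # Assumption: objectors matching no rule are surfaced to P.
        return "notify"


# The example setting from the text, expressed as data.
prefs = ProtestPreferences(
    defer_groups={"Family", "Good Friends"},
    notify_groups={"High School"},
    notify_users={"Pete Campbell"},
)
```

With these settings, an objection from someone in the Family group is honored without a notification, while an objection from Pete Campbell or from anyone in the High School group reaches P's mailbox.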

'''2. Should there be a modified system for children? Should this system only exist for children? Should there only be one period of atonement for coming of age?'''

'''Concern''': Minors are arguably the most likely to generate content that they later regret, that is embarrassing, or that could affect them in the future. They are also, on the whole, not as mature as the rest of the population and should be further protected from the permeability of content generated about them online. Similar to how the criminal records of minors are often expunged in the legal system, should the model guarantee minors greater protection or should the model only exist for minors?

'''Response''': Individual walled gardens are best situated to determine the individual needs of their users. While the authors of this model maintain that the model should apply universally, there is no doubt that minors are perhaps the most in need of the model. That said, individual sites that adopt this model can best determine how they want to implement it and to what population of their users they would like to make it available.


'''Concern''': One of the primary reasons individuals post content online is to share and preserve it in digital form. The model favors the deletion of information and could cause content that would have otherwise remained available for storing or sharing to be removed. What if the individuals generating the content or featured in the content want to preserve it for themselves, friends, or future generations?

'''Response''': There are ways to preserve one’s content. Users under the model will be allowed to have a private archive. But other methods could be used as well. Expired content generated by individuals could be stored in another private mechanism, either online or offline. The most secure way to retain personal data would be to keep it off the cloud and on a personal hard drive, but some users may prefer to keep their content in a cloud. Either way, there are currently multiple devices on which users can store data that is not publicly available.

'''4. What happens if people circumvent the process and simply repost the material?'''


'''Concern''': Individuals that do not want to be over-flooded with complaints or notifications might avoid posting content that is arguably beneficial to society, simply because they know some individuals might object and they do not want to deal with the burdensome process of responding to complaints. | '''Concern''': Individuals that do not want to be over-flooded with complaints or notifications might avoid posting content that is arguably beneficial to society, simply because they know some individuals might object and they do not want to deal with the burdensome process of responding to complaints. | ||

'''Response''': While the model in its current state might prevent content from being renewed, it certainly should not prevent individuals from originally posting the content. Individuals could set their default settings to avoid receiving notification of | '''Response''': While the model in its current state might prevent content from being renewed, it certainly should not prevent individuals from originally posting the content. Individuals could set their default settings to avoid receiving notification of marking. They would thus only encounter resistance when renewing the content. | ||

'''6. Who has standing to | '''6. Who has standing to mark themselves to content?''' | ||

'''Concern''': If there are no minimum requirements for standing, then content generators could be flooded by complaints and notifications form individuals that have no direct reputational stake in the content. Content that is political or otherwise controversial could be deluged with | '''Concern''': If there are no minimum requirements for standing, then content generators could be flooded by complaints and notifications form individuals that have no direct reputational stake in the content. Content that is political or otherwise controversial could be deluged with marks in order to get the content generator to remove. | ||

'''Response''': Standing should be limited to those individuals directly featured in the content. If it is a picture, then the individual must be in the picture. If it is text, then the individuals name should be mentioned in the text. Walled gardens should establish some mechanism for verifying that those | '''Response''': Standing should be limited to those individuals directly featured in the content. If it is a picture, then the individual must be in the picture. If it is text, then the individuals name should be mentioned in the text. Walled gardens should establish some mechanism for verifying that those marking themselves to content actually have the standing to do so. In walled gardens such as Facebook that might be easier to accomplish, but perhaps facial recognition software can be employed or some other mechanism that can verify who markers are and whether they have indeed have standing. Perhaps the process could be outsourced to mechanical turks? | ||

'''7. What about pictures that have social or even historical value, but | '''7. What about pictures that have social or even historical value, but the originator of the material either does not recognize such value or does not want to put forth the additional effort to preserve it?''' | ||

'''Concern''': A model that favors the expiration and possible deletion of content online carries the risk of permanently deleting or creating greater access barriers to content that has social and historical value either currently or in the future.

'''Response''': A model favoring the expiration and public deletion of user generated content could certainly result in the loss of some content that has social and historic value. But this is not a new problem. Famous authors often leave manuscripts unpublished upon their death and often leave clear instructions that such material should not be published. Famous artwork is often kept in the hands of private owners and is not made available for the public at large to see.

It has always been the job of news corporations and media to cover events of historical significance and to obtain licenses or copyright releases from citizens who capture events of historical or social significance. It is arguably only in the last few years, through the Internet, that user generated content has been elevated to its current importance and presence in the public media.

Furthermore, this model should not be applied to certain institutions.<ref>''Id.''</ref> Traditional news media and even current semi-news media sites should not be subject to expiration dates. Archives of newspapers and important events and news should remain available indefinitely.

Moreover, this model does not cause the deletion of material. It simply might discourage its prolonged existence online. So while the costs of acquiring content of social and historic value might have increased, they are not insurmountable.

Finally, it should be noted that this model is attempting only to address the medium-term issues. Problems of historical value are long-term issues that will likely need to be addressed and integrated into systems targeting those long-term issues. Ultimately the short-term, medium-term and long-term systems should be compatible and integrated, but we can only take one step at a time.

'''8. Are we creating an environment that discourages responsibility?'''

'''Concern''': If content we generate or that is generated about us is more easily purged from the public Internet, then people will be less accountable for their actions and perhaps even act more recklessly. Furthermore, they will continue to commit follies, because the consequences are not as permanent. People should take responsibility for their actions rather than rely on deletion.

'''Response''': The above is a matter of preference. It is true that in at least some cases bad actors will make poor choices because their misdeeds are more readily forgiven under this model, but we would argue that a good majority of bad actors are not considering the consequences of their actions at all in the moment. Perhaps getting burned by the current system could influence their behavior in the future, but the reputational damage suffered by the individual might be steeper than the lesson learned. Furthermore, it is not as if the individual is being isolated from the worst immediate effects of his follies. The model only partially shields him from the medium-term effects.

'''9. What is the adjudication process? Should there even be an adjudication process?'''

'''Concern''': If the content generator and the marked individual do not come to a mutual agreement or solution, then what happens? Are we left back at square one?

'''Response''': There are several things that could happen. First, nothing could happen. This could be a system based solely on the contact hypothesis and social psychology research that suggests that individuals are more likely to say yes to another’s individualized request for aid or help. In a large percentage of cases, the model resolves the problem without the need for any mechanism other than the mere facilitation of interaction between two human beings.

Second, there could be a final arbitration or mediation facilitated by a third party. This third party could be in-house or outsourced. A good example of outsourcing to a third party would be SquareTrade. SquareTrade conducted dispute resolution for eBay a few years back and was quite successful in doing so.<ref>''Id.''</ref> The benefit of using an outsourced third party would be that if something had to be taken down, walled gardens would potentially be able to continue asserting their Section 230 Communications Decency Act shield by not directly removing or editing content on their site.

A third option would be for the walled garden to generate its own dispute resolution process. The site could do so by hiring a panel specifically assigned to do just that. Alternatively, the site could elect particular members of the site to vote (however, this might draw more unwanted attention to the content that an individual wants eradicated).

'''10. Should certain institutions be exempt from expiration dates?'''

'''Concern''': Society would be hurt if certain content on the net expired, such as the content of news media organizations and websites.

'''Response''': We only intend this model to be applied by walled gardens of social media and only on user generated content.

=Literature Review=

Many academics have proposed ways to deal with the flood of personal information and gossip in a way that mitigates their effects on everyday life. The ensuing categorizations represent the best fit, but many elements overlap.

==Tort==

[http://groups.csail.mit.edu/mac/classes/6.805/articles/privacy/Privacy_brand_warr2.html Warren and Brandeis’] seminal suggestion of a tort remedy for those whose privacy had been invaded has been seen as a potential solution to the issue of unwanted information on the Internet.

[http://docs.law.gwu.edu/facweb/dsolove/Future-of-Reputation/text/futureofreputation-ch7.pdf Daniel Solove] argues that the law should expand its recognition of duties of privacy. This expansion would allow the law to see the placing of certain information online as a violation of privacy. Solove also proposes that only the initial entity who posts gossip online, not subsequent posters, should be liable.

Solove also develops [http://www.jstor.org/stable/1373222 justifications for protections against the disclosure of private information] and claims that speech of private concern is less valuable than speech of public concern. He argues that the value of concealing one's past can, in many circumstances, outweigh the benefits of disclosure. Moreover, privacy protects against certain rational judgments that society may want to prohibit (such as employment decisions based on genetic information).

Lior Jacob Strahilevitz proposes a [http://www.jstor.org/stable/4495516 revised standard of distinguishing between public and private facts in privacy torts] using insights gleaned from the empirical literature on social networks and information dissemination. He argues that judges can use this research to determine whether an individual had a reasonable expectation of privacy in a particular fact that he has shared with one or more persons: if the plaintiff's previously private information would not have been widely disseminated but for the actions of the defendant, the individual had a reasonable expectation of privacy that tort law should recognize.

==Norms==

Drawing from Lawrence Lessig’s four modes of control, our model is based more heavily on norms. Based on the works of others, such as Robert Ellickson’s ''Order Without Law'',<ref>Robert C. Ellickson, ''Order Without Law: How Neighbors Settle Disputes'' (1994).</ref> we assume that in certain circumstances norms can be as powerful motivators as law in swaying people to act in socially desirable ways. Our model is a norms-based solution that relies on social psychology’s Contact Hypothesis.<ref>Gordon W. Allport, ''The Nature of Prejudice'' (1954).</ref> The contact hypothesis, originally based on racial attitudes,<ref>''Id.''</ref> postulates that, all other things being equal, contact creates a positive intergroup or individual encounter that solidifies bonds and motivates people to act more positively than they would have had they not been individually approached. For example, individuals are more likely to contribute financially to a political campaign if they are contacted personally by the candidate than if they are mass mailed. Recent studies have also shown that individuals underestimate the willingness of strangers to aid them if they ask for help.<ref>Francis J. Flynn & Vanessa K.B. Lake, ''If You Need Help, Just Ask: Underestimating Compliance With Direct Requests for Help'', 95 J. of Personality and Social Psychology 128 (2008).</ref> One study found that:

:When people were asked to assume the role of the potential helper rather than the role of the help seeker, they gave higher estimates of others’ willingness to comply. In addition, people in the role of the potential helper gave relatively higher estimates of how discomforting (e.g., awkward, embarrassing) it would be to refuse to comply with a direct request for help compared with those in the help-seeker condition. This perceived-discomfort variable mediated the influence of assigned role on estimated compliance.<ref>''Id.'' at 135.</ref>

The Contact Hypothesis’s utility in cyberspace has also recently been explored.<ref>''See'' Y. Amichai-Hamburger & K. Y. A. McKenna, ''The Contact Hypothesis Re-Considered: Interacting Via the Internet'', 13 J. Computer-Mediated Comm. (2006).</ref> The model above seeks to benefit from this well-supported theory by requiring individual contact between parties.

Other solutions to reputation management online have looked at social psychology theories to advance their propositions. Lior Strahilevitz’s ''How’s My Driving for Everyone and Everything''<ref>Lior Strahilevitz, ''How’s My Driving for Everyone and Everything'', 81 N.Y.U.L. Rev. 1699 (2006).</ref> looks at a norms-based way of shifting individuals from a loose and large social network to a smaller one that creates repeat players with reputations at stake in their interactions with strangers driving on the road. Similarly, this model seeks to personalize and humanize interactions among strangers or loose-knit community members to maximize the utility of community norms. In his article ''Social Norms from Close-Knit Groups to Loose-Knit Groups'', Strahilevitz explains that “Loose-knit environments give us an opportunity to study norms in contexts where a rational choice account of how norms arise and are enforced is implausible.”<ref>70 U. Chi. L. Rev. 359, 362 (2003).</ref> Relying on social norms, others have suggested that privacy contracts among online social network users that would express their privacy preferences would be followed, not only because of legal enforceability, but also because of norms respecting the wishes users have when sharing their personal information.<ref>Patricia Sanchez Abril, ''Private Ordering: A Contractual Approach to Online Interpersonal Privacy'', 45 Wake Forest L. Rev. 689, 707 (2010).</ref>

James Grimmelmann in [http://works.bepress.com/cgi/viewcontent.cgi?article=1019&context=james_grimmelmann ''Saving Facebook''] stresses that any solution to privacy concerns on Facebook must comport with how users perceive their social environment. Solutions like restrictions on certain uses of social media or strong technical controls on who can see what information will not work because they do not align with the nuances of social relationships and the desire of young people to subvert attempts to control their behavior. Conversely, solutions that take into account what Facebook users’ expectations of privacy are and how they interact with their online friends will have a greater chance for success.

[http://futureoftheinternet.org/reputation-bankruptcy Jonathan Zittrain] proposes the concept of “reputation bankruptcy”, in which an individual would be able to wipe out the entirety of his online reputation, both the good and bad aspects of it. The following is a quote from Zittrain's article in which he discusses his proposal:

:Like personal financial bankruptcy, or the way in which a state often seals a juvenile criminal record and gives a child a “fresh start” as an adult, we ought to consider how to implement the idea of a second or third chance into our digital spaces. People ought to be able to express a choice to de-emphasize if not entirely delete older information that has been generated about them by and through various systems: political preferences, activities, youthful likes and dislikes.

Zittrain suggests that the lack of selectivity would help prevent excessive use of this tool. Zittrain’s proposal could be compatible with all categories depending upon how he further defines it.

Lauren Gelman in [http://papers.ssrn.com/sol3/papers.cfm?abstract_id=1520111 ''Privacy, Free Speech, and “Blurry-Edged” Online Networks''] argues for a norms-based approach to privacy online. She describes the online world as having “blurry edges” in the sense that information online that is intended for a select group of people can be accessed by the entire online community. She proposes a technological solution in which information that an individual posts online can include tags stating that individual’s preferences regarding privacy and how the information may be used. Gelman asserts that online users will likely accept this request if it is presented immediately upon users accessing the information, an assertion that is in line with the aforementioned Contact Hypothesis.

==Technological==

Viktor Mayer-Schönberger’s solution in ''Delete'' shares Grimmelmann’s focus on user expectations, as Mayer-Schönberger seeks to establish in the online world our natural expectation of forgetting. He proposes the application of expiration dates to information, whereby creators set expiration dates for their data. Mayer-Schönberger allows for government involvement to determine when expiration dates could be changed or who should be involved in setting the date. Also, this system allows for no gradual decay or modification; expiration dates can only be extended by social mandate.<ref>''Delete'' at 184-192.</ref>

==Regulatory==

Frank Pasquale in [http://papers.ssrn.com/sol3/papers.cfm?abstract_id=888327 ''Rankings, Reductionism, and Responsibility''] and in [http://www.law.umaryland.edu/academics/journals/jbtl/issues/3_1/3_1_061_Pasquale.pdf ''Asterisk Revisited: Debating a Right of Reply on Search Results''] proposes government regulation of search results that would give individuals a right of reply through an asterisk placed next to a search result. The asterisk would lead to the individual in question’s own statement on the undesirable result. Our model includes a similar right of reply at the phase when the status of renewed data is contested; that is, when a content creator renews data that implicates one or more parties against their objection. The model allows the party to opt for an asterisk that can provide context for the objectionable image.

The [http://www.telegraph.co.uk/technology/internet/8112702/EU-proposes-online-right-to-be-forgotten.html European Union] is considering an online “right to be forgotten.” Users would be able to tell websites to remove all personal information the website has about them. If a website did not comply, it could face official punishment. On November 4, 2010, the European Commission announced that it will propose new legislation in 2011 to strengthen EU data protection and unveiled a series of proposals as part of its overall strategy to protect personal data. Vice-President Viviane Reding, EU Commissioner for Justice, Fundamental Rights and Citizenship, called the protection of personal data a fundamental right. With respect to personal data online, the European Commission [http://redtape.msnbc.com/2010/11/eu-to-create-right-to-be-forgotten-online.html stated] that “[p]eople should be able to give their informed consent to the processing of their personal data . . . and should have the "right to be forgotten" when their data is no longer needed or they want their data to be deleted.”

Cass Sunstein in his book ''On Rumors'' suggests the creation of a notice and takedown procedure for falsehoods similar to that of the Digital Millennium Copyright Act.<ref>Cass Sunstein, ''On Rumors'' 78-79 (2009).</ref> Sunstein describes this as a “right” rather than a norm, demonstrating the need for government action. Also, this proposed notice and takedown system only deals with falsehoods, not with embarrassing information that is true.

[http://volokh.com/posts/1176705254.shtml Orin Kerr] suggests conditioning immunity for websites with objectionable content under section 230 of the Communications Decency Act on whether the site owner prevented search engine bots from picking up its content. If a search engine did pick up the content, the website would be liable for the content the search engine displays. Under this suggestion, the objectionable content would still exist; it just could not be found through a search engine.

==Market==

[http://preibusch.de/publications/social_networks/privacy_jungle_dataset.htm Joseph Bonneau and Sören Preibusch] at the University of Cambridge conducted the first thorough analysis of the market for privacy practices and policies in online social networks. They conclude that “the market for privacy in social networks is dysfunctional in that there is significant variation in sites’ privacy controls, data collection requirements, and legal privacy policies, but this is not effectively conveyed to users.” They also find that “privacy is rarely used as a selling point, even then only as auxiliary, nondecisive feature.” Bonneau and Preibusch advocate “a privacy communication game, where the economically rational choice for a site operator is to make privacy control available to evade criticism from privacy fundamentalists, while hiding the privacy control interface and privacy policy to maximize sign-up numbers and encourage data sharing from the pragmatic majority of users.” Their proposal is in tension with our model insofar as it would make privacy policies even more inaccessible to users; our proposal seeks to market privacy as a selling point based on user preferences and principles of reputation management, while protecting the ability of site operators to use member data.

Many articles discuss privacy and data management in the context of online commercial transactions.<ref>''See, e.g.'', Mary J. Culnan and George R. Milne, ''Strategies for Reducing Online Privacy Risks: Why Consumers Read (or Don't Read) Online Privacy Notices'', 18 J. of Interactive Marketing 15, 18 (2004) (concluding that reading is related to concern for privacy, positive perceptions about notice comprehension, and higher levels of trust in the notice); Eve M. Caudill and Patrick E. Murphy, ''Consumer Online Privacy: Legal and Ethical Issues'', 19 J. of Pub. Pol'y & Marketing 7 (2000), available at http://www.jstor.org/stable/30000483; George R. Milne, ''Privacy and Ethical Issues in Database/Interactive Marketing and Public Policy: A Research Framework and Overview of the Special Issue'', 19 J. of Pub. Pol'y & Marketing 1 (2000).</ref> Viktor Mayer-Schönberger has also stressed that privacy could be a powerful selling point for social networks seeking to attract new users.<ref>Interview with Viktor Mayer-Schönberger (Nov. 17, 2010).</ref> Privacy's market appeal can be seen in the [http://techcrunch.com/2010/05/12/diaspora-open-facebook-project/ success in acquiring funding] of [https://joindiaspora.com/ Diaspora], a Facebook alternative that emphasizes user privacy and control of content.

==Property==

[http://www.jstor.org/stable/4093335 Paul M. Schwartz] has proposed a model for propertized personal information that would help fashion a market that would respect individual privacy and help maintain a democratic order. His proposal includes five elements: “limitations on an individual's right to alienate personal information; default rules that force disclosure of the terms of trade; a right of exit for participants in the market; the establishment of damages to deter market abuses; and institutions to police the personal information market and punish privacy violations.”

==Other Research==

A Stanford Law Review Symposium<ref>Stanford Law Review, Vol. 52, No. 5, Symposium: Cyberspace and Privacy: A New Legal Paradigm? (May, 2000)</ref> on cyberspace and privacy provides a number of instructive articles on legal and theoretical prescriptions for privacy problems on the internet. [http://www.jstor.org/stable/1229511 Pamela Samuelson] suggests that information privacy law needs to impose minimum standards of commercial morality on firms that process personal data, and proposes that default licensing rules of trade secrecy law can be adapted to protect personal information in cyberspace. [http://www.jstor.org/stable/1229519 Michael Froomkin] discusses leading attempts to craft legal responses to assaults on privacy, including self-regulation, privacy-enhancing technologies, data-protection law, and property-rights based solutions. [http://www.jstor.org/stable/1229515 Jessica Litman] explores property and common law solutions to protecting information property. [http://www.jstor.org/stable/1229517 Julie Cohen] argues that the debate about data privacy protection should be grounded in an appreciation of the conditions necessary for individuals to develop and exercise autonomy, and that meaningful autonomy requires a degree of freedom from monitoring, scrutiny, and categorization by others. [http://www.jstor.org/stable/1229513 Jonathan Zittrain] discusses how “trusted systems” technologies might allow more thorough mass distribution of data, while allowing publishers to retain unprecedented control over their wares.

=Notes=

<references />

Latest revision as of 05:46, 13 August 2020

The prominence of user generated content and the permanence of online memory have created a difficult problem that could threaten the futures of many people today.[1] Social networks, blogs, and other websites have become incredibly popular locations to put pictures, videos, and text about an individual, his opinions, and his actions. Many of these items may not be considered objectionable at first, but they could become sources of embarrassment in the future.[2] As of now, information on the Internet is long-lasting, if not permanent, and many individuals do not have the ability to remove media and information that depicts or references them. This permanence could lead to negative ramifications in a short period of time when an individual seeks a new job or relationship. Our project seeks to remedy this medium-term harm by establishing a process by which users can have information about themselves removed from "walled gardens" like social networks and blogs. We hope this will allow people to recover from mistakes, not be doomed by them.

Introduction

Perhaps the seminal discussion on privacy is Samuel Warren and Louis Brandeis’ Harvard Law Review article The Right to Privacy from 1890. The authors wrote this article in response to the invention of new camera technology that allowed for instantaneous photographs. Warren and Brandeis feared that such technology would expose the private realm to the public, creating a situation in which “what is whispered in the closet shall be proclaimed from the house-tops.” Their article argued that a tort remedy should be available for those whose privacy had been violated in such a way. Warren and Brandeis's thesis exemplified a trend in legal thought that would achieve more prominence in the Internet Age: the need for normative, legal and governmental structures to protect private and undesirable information about people from reaching the public.

The Internet has created a world of permanence, in which whispers in the closets are not only “proclaimed from the house-tops” but also never disappear. In Delete: The Virtue of Forgetting in the Digital Age, Viktor Mayer-Schönberger describes how the digital age changed the status quo of society, which had been “we remembered what we somehow perceived as important enough to expend that extra bit of effort on, and forgot most of the rest.”[3] Four technological advances (digitization of information, cheap storage, easy retrieval, and the global reach of the Internet) have made all memories permanent.[4] This change has forced humans to constantly deal with their past, preventing them from growing and moving on.

The permanent past created by the Internet has increased the availability of information that can be used to judge people. Lior Jacob Strahilevitz discusses the effect of this flood of information on reputation and exclusion in his article Reputation Nation: Law in an Era of Ubiquitous Personal Information. The ability of a Google search or a social networking site to deliver personal information allows people to utilize online and real world reputation when making decisions in a way that was not feasible years ago. Strahilevitz discusses both the positive and negative ramifications of this information surplus, but other scholars have focused on its negative effect on offline lives. Daniel Solove in The Future of Reputation: Gossip, Rumor, and Privacy on the Internet describes many of these concerns. Solove relates many stories demonstrating the negative ramifications of online information, such as that of a girl whose refusal to pick up her dog’s waste led to her picture becoming famous online. A commenter on a blog which discussed this picture stated “[r]ight or wrong, the [I]nternet is a cruel historian.” Solove states that the ability of information, especially that which is considered scandalous or interesting, to spread quickly and become permanent has distorted how we judge people. Many different types of people (family, friends, employers, etc.) can evaluate each other using personal information and gossip that many would consider to be unfair factors in such an evaluation. Solove in his article A Taxonomy of Privacy notes that “disclosure can also be harmful because it makes a person a "prisoner of [her] recorded past." People grow and change, and disclosures of information from their past can inhibit their ability to reform their behavior, to have a second chance, or to alter their life's direction. Moreover, when information is released publicly, it can be used in a host of unforeseeable ways, creating problems related to those caused by secondary use.”
Solove refers to Lior Strahilevitz's observation that "disclosure involves spreading information beyond existing networks of information flow." According to Solove, the harm of disclosure is not so much the elimination of secrecy as it is the spreading of information beyond what the user wished. One inappropriate Facebook picture or malicious blog post can haunt someone for the rest of his life.

Today's young adults and teenagers are especially aware that they are growing up in a world where camera phones and constant access to the Internet create the potential for their lives to be displayed publicly. A May 2010 Pew Internet and American Life study suggests that privacy is highly valued by the next generation of social networking users. The report found that “Young adults, far from being indifferent about their digital footprints, are the most active online reputation managers in several dimensions. For example, more than two-thirds (71%) of social networking users ages 18-29 have changed the privacy settings on their profile to limit what they share with others online.” This generation is concerned about the effects of youthful indiscretion and does not want one mistake to limit its future prospects.

A study by scholars at the University of California, Berkeley Center for Law & Technology and Center for the Study of Law and Society, and at the University of Pennsylvania's Annenberg School for Communication, found privacy norms that are consistent with and provide some support for the privacy theory underlying our model. For instance:

- Large proportions of all age groups have refused to provide information to a business for privacy reasons. They agree or agree strongly with the norm that a person should get permission before posting a photo of someone who is clearly recognizable to the internet, even if that photo was taken in public. They agree that there should be a law that gives people the right to know “everything that a website knows about them.”[5]

And a large majority of members of each age group agree that there should be a law that requires websites and advertising companies to delete “all stored information” about an individual.” [6]. The following is a chart from the study demonstrating the popularity of a deletion requirement:

Proposed Mid-term Content Management System

Our Approach

Many proposals regarding online reputation and information share several characteristics: significant government intervention and a focus on dramatic short-term reputation harm. Regarding government intervention, many scholars devote a portion of their articles and books to discussing various legal problems with their proposals, namely First Amendment challenges. Eugene Volokh in his article Freedom of Speech and Information Privacy: The Troubling Implications of a Right to Stop People From Speaking About You discusses how broad information privacy regulations would not be allowable under the First Amendment and how allowing such regulations could create negative legal and social ramifications. Many proposals that involve the government restricting speech would likely face significant First Amendment roadblocks.

Proposals often focus on the highly objectionable material that would warrant liability or a complete erasing of one’s online reputation. Yet these approaches do not address embarrassing but legal and mundane content, such as a picture of oneself drunk or an inappropriate Facebook group on which an individual once posted. Such activities likely do not rise to the level warranting litigation or complete erasure of one's online identity, but they could negatively affect one’s professional and personal relationships. They are the equivalent of putting one's foot in one's mouth.

Our project departs from these proposals both in its focus and implementation. We have divided online reputation into three different types: short-term, medium-term, and long-term. Short-term harms are exemplified by those involved with the Duke sex PowerPoint scandal, in which an incendiary piece of information creates immediate and dramatic ramifications for one’s reputation. Long-term reputational content is something that has been built over many years, such as one’s eBay rating. Medium-term reputational content is not necessarily immediately recognized as harmful, but its ramifications may come up when establishing a relationship or looking for a job. For example, a freshman in college may have a picture of himself drunk on Facebook. Such a picture may not be an issue now but could become one when he looks for a job in several years. Our proposal will address these medium-term issues.

Our proposal relies on a combination of market- and norms-based approaches. Jonathan Zittrain creates a classification system for such approaches to online issues in his article The Fourth Quadrant. This system uses two measures: how “generative” the approach is and how “singular” it is. An undertaking that is created and operated by the entire community (not just by a smaller entity separated from the community) and is the only one of its kind utilized in the online realm falls into what Zittrain terms the “fourth quadrant.” Our system will be created and implemented by the social network and blog communities, both their operators and users. The government will not implement or enforce anything. Since our system is optional for websites, it initially will be one of several potential approaches dealing with medium-term harms to reputation. However, our hope is that its advantages and benefits (including its ability to attract users through promises of increased privacy) will allow it to become a popular system and, therefore, eventually become part of the fourth quadrant.

We believe that a market- and norms-based approach avoids the First Amendment issues, is more responsive to the needs of users and internet service providers, and is more likely to be implemented as a bottom-up approach that can be tailored to meet the specific preferences of different demographics interfacing with different forms of online expression.

Assumptions

As discussed above, there are three distinct phases of reputation: short-term, mid-term, and long-term. Our model is targeted solely at mid-term reputational issues. Short- and long-term issues will require other mechanisms and should be considered independently.

When composing our model, we had four core assumptions:

- 1) All user-generated content should expire and cease to be publicly available after a set length of time, unless it is actively renewed. This theory follows from Viktor Mayer-Schönberger’s work. [8]

- 2) The path of least resistance should result in the socially optimal outcome. This means that whenever a user follows default settings or acts in a lazy or apathetic fashion, the end result should be desirable. The model should make doing the “right” thing as easy as possible.

- 3) Direct, personal communications between real people lead to more cooperative outcomes. A user can empathize and be more receptive to an individualized appeal from another person than he would be to an automated message. Building these sorts of communications into the system fosters a social norm of cooperation and understanding.

- 4) The system should be content neutral so as to increase efficiency and avoid arbitrary implementation. If the system discriminated in how it treated content based on what type of content it was, then the process would get bogged down in the minutiae of moralistic review.

Our model will present a process by which content expires or renews, depending on how implicated parties intervene or abdicate. In the model, P is a user who initially posts content, Q is a user who is represented in the content, and R is a third-party bystander.

The system would be applied by social media websites that choose to apply it. There is no feasible method of mandating its use, nor is there a reason to. These websites will want to implement this system or one similar to it of their own accord. Here’s why:

- 1) The system will make a website more desirable to users who value their privacy and reputations.

- 2) The system creates a stickiness that will keep users coming back to the social media website that uses it.

- 3) The system does not substantially impede a website's ability to profit from data mining, thus allowing it to promise greater privacy protections to its users without hurting the company’s source of revenue.

Finally, it is important to note that the system is not designed to be 100% effective at addressing all potentially problematic content. The goal is solely to create a process that is easy to implement, easy to understand, and will achieve the socially optimal result in the vast majority of cases.

Model

- 1) A user, P, is presented with preferences when he first uses the system. He can either use the presets chosen by the website or adjust the preferences to his taste. These preferences would determine his default actions for expirations, renewals, notifications, protests, etc.

- 2) P posts content on the website.

- 3) P chooses an expiration date for his content or uses his default expiration settings.

- 4) The content might contain a representation of Q. Q can then be “marked” on the content by P, Q or R. To be “marked” means that Q is alerted that he is implicated in this content and is given the option to be notified when the content comes up for renewal.

- 5) If no one is marked on the content, then P will, in accordance with his preferences, receive a notification 30 days prior to the content’s expiration date. P can then choose to renew the content or do nothing. If P does nothing, the content expires.

- 6) If Q is marked but never opts in for notification, then the content is treated as if no one is marked.

- 7) If Q is marked and opts in for notification, and if P chooses to renew the content, then Q will receive a notification that P chose to renew the content.

- 8) Q can ignore this notification and the content will renew as per P’s decision.

- 9) Alternatively, Q can “protest” P’s decision to renew the content. This means that Q would send a message to P saying that he doesn’t want the content renewed and explaining why. This protest would contain standard options that Q could select for his reasoning, but Q would also be required to compose a personalized message to P.

- 10) P, upon receiving Q’s protest, can choose to move forward with the renewal (with or without modifying the content), halt the public renewal, or do nothing.

- 11) If P does nothing, the content will expire, as per Q’s wishes.

- 12) If P halts the public renewal, the content will still be kept in P’s private archive but will not be viewable to any other users.

- 13) If P chooses to move forward with the renewal (with or without modifying the content), P must then send a response to Q explaining why P chose to renew the content over Q’s protest. This would also be a personalized message.

- 14) Upon receiving P’s response, Q might be satisfied with P’s reasons or modifications. If Q does nothing, the content will renew.

- 15) If Q is partially dissatisfied with P’s response, Q could choose to add an “asterisk” to the content. This would be a discrete message that viewers of the content would see, consisting of Q’s protest to P. The content would renew with the asterisk.

- 16) If Q is substantially dissatisfied with P’s response, Q could appeal the renewal to an adjudication process. This process would determine whether or not the content renews.
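The decision path in steps 5 through 16 can be read as a small state machine. The following is a minimal sketch of that reading, not a definitive implementation: the names `POutcome`, `QFollowUp`, `Result`, and `resolve` are hypothetical, and the sketch deliberately leaves out notifications, the required personalized messages, and the adjudication process itself.

```python
from enum import Enum


class POutcome(Enum):
    """P's possible reactions to Q's protest (step 10). Hypothetical names."""
    RENEW = "renew"      # move forward, with or without modifying the content
    HALT = "halt"        # halt the public renewal
    NOTHING = "nothing"  # do nothing


class QFollowUp(Enum):
    """Q's possible reactions to P's response (steps 14-16). Hypothetical names."""
    NOTHING = "nothing"
    ASTERISK = "asterisk"
    APPEAL = "appeal"


class Result(Enum):
    EXPIRED = "expired"
    PRIVATE_ARCHIVE = "kept in P's private archive only"
    RENEWED = "renewed"
    RENEWED_WITH_ASTERISK = "renewed with asterisk"
    ADJUDICATION = "sent to adjudication"


def resolve(p_renews: bool,
            q_opted_in: bool = False,
            q_protests: bool = False,
            p_outcome: POutcome = POutcome.NOTHING,
            q_follow_up: QFollowUp = QFollowUp.NOTHING) -> Result:
    """Return the fate of one piece of content at its expiration date."""
    # Step 5: if P does nothing, the content expires.
    if not p_renews:
        return Result.EXPIRED
    # Steps 6-8: without an opted-in Q who protests, P's renewal stands.
    if not (q_opted_in and q_protests):
        return Result.RENEWED
    # Step 11: P ignores the protest, so the content expires.
    if p_outcome is POutcome.NOTHING:
        return Result.EXPIRED
    # Step 12: P halts the public renewal but keeps a private copy.
    if p_outcome is POutcome.HALT:
        return Result.PRIVATE_ARCHIVE
    # Steps 13-16: P renews over the protest; Q decides how to respond.
    if q_follow_up is QFollowUp.ASTERISK:
        return Result.RENEWED_WITH_ASTERISK
    if q_follow_up is QFollowUp.APPEAL:
        return Result.ADJUDICATION
    return Result.RENEWED
```

Note that every default argument corresponds to inaction, and that inaction by P always ends in expiration, which is how the sketch reflects the "path of least resistance" assumption.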

Explanation of the Model

Users will set preferences or use default preferences for expiration and notification to streamline decisions. These will help triage content to prevent users from being deluged with notifications.

- For example, presets could allow users to auto-ignore, auto-opt in to notify, or auto-protest based on the identity of the other party. Certain groups might get trusted automatically, while others would be automatically suspect.

- E.g. If your mother marks you in a photo, you know that most of the time you probably don’t need to worry about its content.

- However, because the purpose of the system is to expire valueless content, users would not be allowed to set their preferences to auto-renew their content.
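As an illustration only, the presets described above might be stored as a per-group lookup that refuses any auto-renew rule. The class and group names below are hypothetical, and the choice of "ignore" as the fallback for unlisted groups is an assumption; the model does not specify a default.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict


class Action(Enum):
    IGNORE = "auto-ignore"
    NOTIFY = "auto-opt in to notify"
    PROTEST = "auto-protest"
    RENEW = "auto-renew"  # listed only so that it can be rejected


@dataclass
class Preferences:
    # Assumption: groups without a rule fall back to IGNORE.
    default: Action = Action.IGNORE
    by_group: Dict[str, Action] = field(default_factory=dict)

    def set_rule(self, group: str, action: Action) -> None:
        """Store a preset for a group; auto-renew is never allowed."""
        if action is Action.RENEW:
            raise ValueError("preferences may not auto-renew content")
        self.by_group[group] = action

    def action_for(self, group: str) -> Action:
        """Look up what to do when someone in this group marks the user."""
        return self.by_group.get(group, self.default)


prefs = Preferences()
prefs.set_rule("family", Action.IGNORE)      # trusted automatically
prefs.set_rule("strangers", Action.PROTEST)  # automatically suspect
```

Rejecting the rule when it is set, rather than when it is applied, keeps a forbidden preference from ever being stored.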

When P chooses an expiration date, P can opt to receive or not receive notice prior to expiration. Presumably, most content, being transitory, will be allowed to expire without notice (e.g. status posts, comments, tweets, etc.). Thus, only truly substantive content would ever even require subsequent action by P or Q.

Only an actual person depicted by the content would have “standing” to be marked.

- This rule’s efficient application will compensate for its under-inclusivity. Trying to get 100% inclusivity for all affected users or content would make the perfect the enemy of the good.

- The rule will also help prevent large groups from ganging up to harass one person.

CAPTCHAs would be used to ensure that only real people will be able to mark content. [9]

Q should not have the ability to prevent expiration. The content belongs to P; if he wants to expire it, that’s his right.

R should not have the ability to prevent either expiration or renewal. Being neither the owner of the content (P) nor someone implicated in the content (Q), a third party should have no rights to dictate what happens or doesn’t happen with the content.
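The division of rights among P, Q, and R can be summarized as a simple permission table. This is a sketch under stated assumptions: the action names are hypothetical labels for the steps of the model, and "prevent_expiration" appears in no role's set because the model grants that power to no one other than P acting through renewal.

```python
# Hypothetical action names; the roles P, Q, and R come from the model.
ALLOWED = {
    "P": {"mark", "set_expiration", "renew", "let_expire"},
    "Q": {"mark", "protest_renewal", "add_asterisk", "appeal"},
    "R": {"mark"},
}


def may(role: str, action: str) -> bool:
    """Return True if the given role is permitted to take the action."""
    return action in ALLOWED.get(role, set())
```

For example, `may("Q", "protest_renewal")` is true, while `may("Q", "prevent_expiration")` and `may("R", "renew")` are both false.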

Users could maintain a private archive of their content that will not expire, subject to their preferences. Many users use online social media websites as multimedia diaries of their lives. Users should have the option of permanently retaining all of their own content for private viewing. But they should not be able to keep such information publicly viewable without going through the system.

- Our chief concern is controlling mid-term reputational effects. Thus, if something is private, it cannot substantively affect someone else’s reputation and does not need to be constrained.

No one will be able to search for asterisks. [10] Asterisks serve to explain content that Q finds objectionable. By their very nature, asterisks will be associated with controversial content. If a user could search for an asterisk then these asterisks would serve as homing beacons for the very content that Q wants to disappear in the first place.
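One way to picture this rule is a search routine that matches only the content itself and never consults the asterisk. The sketch below is illustrative: `Item` and `search` are hypothetical names, and a real site's search index would be far more involved.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Item:
    text: str                       # the content posted by P
    asterisk: Optional[str] = None  # Q's protest, shown to viewers if present


def search(items: List[Item], query: str) -> List[Item]:
    """Match the query against content text only, never against asterisks."""
    needle = query.lower()
    return [item for item in items if needle in item.text.lower()]


items = [
    Item("Photos from the spring formal", asterisk="This caption is misleading."),
    Item("Notes from the reading group"),
]
```

Here `search(items, "misleading")` returns nothing, because the word appears only in an asterisk, while `search(items, "spring")` still returns the first item with its asterisk attached for viewers to read. The function also offers no way to filter on whether an asterisk exists.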

The adjudication process might be styled after either the Uniform Domain-Name Dispute-Resolution Policy (“UDRP”) or eBay’s adjudication process. [11] It is likely that this process would be contracted out to established third parties. Contracting this service out to the market will allow different methods to develop competitively, fostering innovation and efficiency.

- It should be noted that the adjudication process is the only part of the model that will take a substantive look at the nature of the content.

Concerns and Responses to the Model

1. How do we prevent a deluge of expiration notifications and other notifications from the process?

Concern: The model is likely to create a considerable number of email notifications for both P and Q. Online social media users generate content every day, so the flow will be constant. This flood of emails could discourage some walled gardens from implementing the model if they fear that users will abandon the network because their mailboxes are constantly flooded. Will users take concerns seriously when they receive so many of them over short periods of time?

Response: As in every architectural design (both physical and technological), there are tradeoffs. The benefit of the model is that it facilitates interactions and keeps users constantly apprised of content they generate and content generated about them. The drawback mirrors the benefit: users are constantly kept informed, which means they are constantly receiving notifications. Aside from the benefit of being aware, there are some tools users could employ to avoid mailbox flooding.