Urs Gasser shares some thoughts on how we learn to trust new technologies in a time of rapid change:

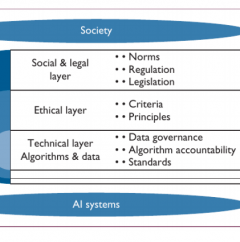

We are witnessing a wave of AI-based technologies making their way out of the labs and into industry- and user-facing applications, and history shows that trust is an important factor in the adoption of new technology. Given today’s quicksilver AI environment, it seems fair to ask: Do we already have the necessary trust in AI, and if not, how do we create it?
